- Topic

34k Popularity

17k Popularity

44k Popularity

17k Popularity

43k Popularity

19k Popularity

7k Popularity

4k Popularity

114k Popularity

28k Popularity

- Pin

- 🎊 ETH Deposit & Trading Carnival Kicks Off!

Join the Trading Volume & Net Deposit Leaderboards to win from a 20 ETH prize pool

🚀 Climb the ranks and claim your ETH reward: https://www.gate.com/campaigns/site/200

💥 Tiered Prize Pool – Higher total volume unlocks bigger rewards

Learn more: https://www.gate.com/announcements/article/46166

- 📢 ETH Heading for $4800? Have Your Say! Show Off on Gate Square & Win 0.1 ETH!

The next bull market prophet could be you! Want your insights to hit the Square trending list and earn ETH rewards? Now’s your chance!

💰 0.1 ETH to be shared between 5 top Square posts + 5 top X (Twitter) posts by views!

🎮 How to Join – Zero Barriers, ETH Up for Grabs!

1.Join the Hot Topic Debate!

Post in Gate Square or under ETH chart with #ETH Hits 4800# and #ETH# . Share your thoughts on:

Can ETH break $4800?

Why are you bullish on ETH?

What's your ETH holding strategy?

Will ETH lead the next bull run?

Or any o

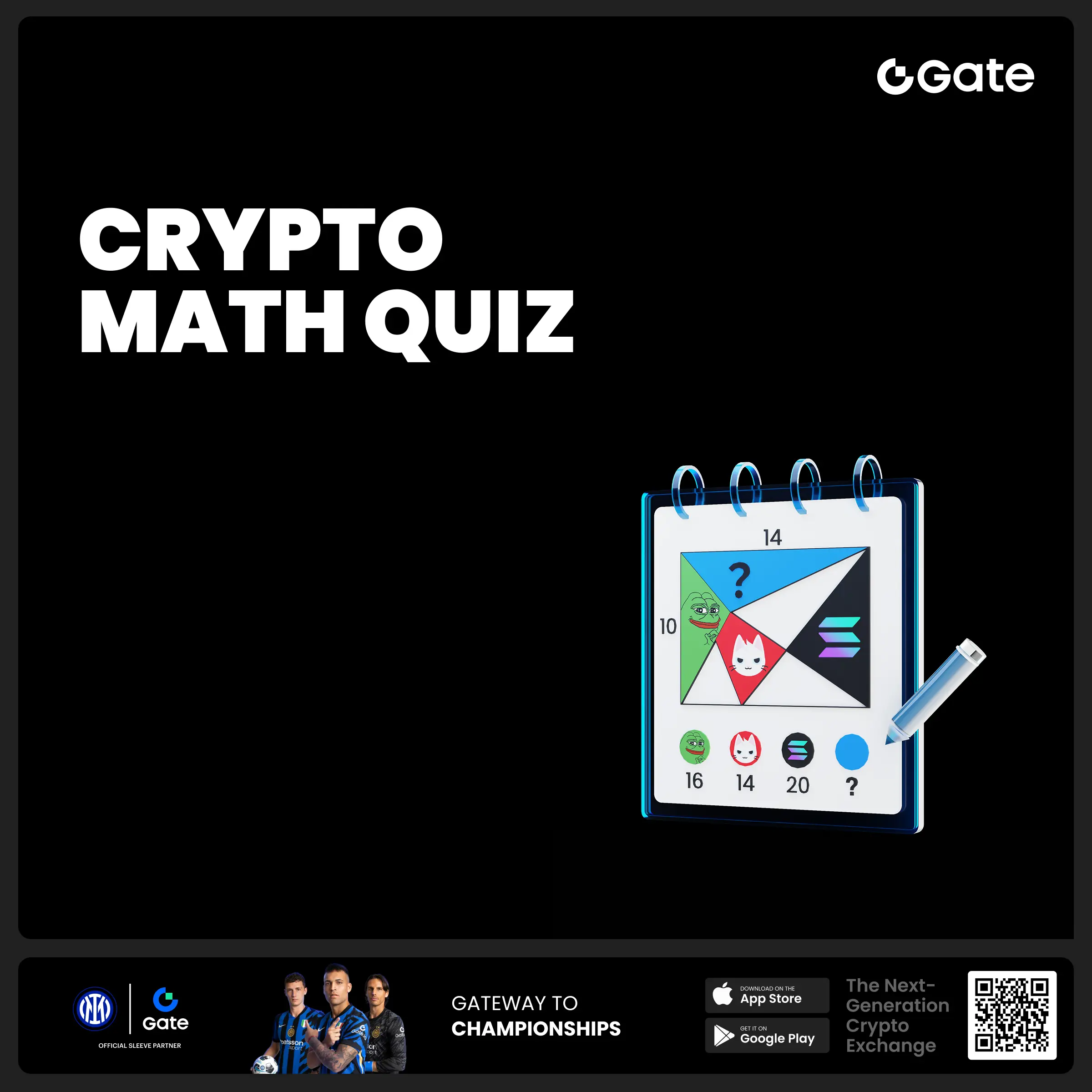

- 🧠 #GateGiveaway# - Crypto Math Challenge!

💰 $10 Futures Voucher * 4 winners

To join:

1️⃣ Follow Gate_Square

2️⃣ Like this post

3️⃣ Drop your answer in the comments

📅 Ends at 4:00 AM July 22 (UTC)

- 🎉 #Gate Alpha 3rd Points Carnival & ES Launchpool# Joint Promotion Task is Now Live!

Total Prize Pool: 1,250 $ES

This campaign aims to promote the Eclipse ($ES) Launchpool and Alpha Phase 11: $ES Special Event.

📄 For details, please refer to:

Launchpool Announcement: https://www.gate.com/zh/announcements/article/46134

Alpha Phase 11 Announcement: https://www.gate.com/zh/announcements/article/46137

🧩 [Task Details]

Create content around the Launchpool and Alpha Phase 11 campaign and include a screenshot of your participation.

📸 [How to Participate]

1️⃣ Post with the hashtag #Gate Alpha 3rd - 🚨 Gate Alpha Ambassador Recruitment is Now Open!

📣 We’re looking for passionate Web3 creators and community promoters

🚀 Join us as a Gate Alpha Ambassador to help build our brand and promote high-potential early-stage on-chain assets

🎁 Earn up to 100U per task

💰 Top contributors can earn up to 1000U per month

🛠 Flexible collaboration with full support

Apply now 👉 https://www.gate.com/questionnaire/6888

Vitalik Buterin: The Community Notes social media experiment is very "crypto"

Original Author: vitalik

Compiled by: Deep Tide TechFlow

The past two years have been arguably turbulent for Twitter (X). Last year, Elon Musk purchased the platform for $44 billion, and then overhauled the company’s staffing, content review, business model, and website culture. These changes may be more due to Elon Musk’s soft power, rather than specific policy decisions. Amid these controversial moves, however, a new feature on Twitter is quickly gaining importance and seemingly enjoying favor across the political spectrum: Community Notes.

Community Notes is a fact-checking and anti-disinformation tool that sometimes attaches contextual annotations to tweets, including tweets from Elon Musk himself. It was originally called Birdwatch and first launched as a pilot program in January 2021. Since then, it has gradually expanded, with the most rapid expansion phase coinciding with Elon Musk's takeover of Twitter last year. Today, Community Notes appear regularly on tweets that get a lot of attention on Twitter, including those addressing controversial political topics. In my opinion, and from my conversations with many people across the political spectrum, these Notes are informative and valuable when they appear.

But what interests me most about Community Notes is that, while it is not a "crypto project," it is probably the closest instance of "crypto values" we've seen in the mainstream world. Community Notes are not written or curated by centrally selected experts; instead, anyone can write and vote, and which Notes are displayed is determined entirely by an open-source algorithm. The Twitter website has a detailed and comprehensive guide describing how the algorithm works, and you can download the data containing posted Notes and votes, run the algorithm locally, and verify that the output matches what is visible on the Twitter website. While not perfect, it comes surprisingly close to the ideal of credible neutrality in rather controversial situations, and it is very useful at the same time.

How does the Community Notes algorithm work?

Anyone with a Twitter account that meets certain criteria (basically: active for more than 6 months, no violation history, verified mobile number) can sign up to participate in Community Notes. Currently, participants are being accepted slowly and randomly, but eventually the plan is to allow anyone who is eligible to join. Once accepted, you can first participate in rating existing Notes, and once your ratings are good enough (measured by how often your ratings match the final outcome for each Note), you can also write your own Notes.

When you write a Note, it gets a score based on the reviews of other Community Notes members. These reviews can be viewed as votes along the three levels of "helpful," "somewhat helpful," and "not helpful," but reviews can also contain other labels that play roles in the algorithm. If a Note's score exceeds 0.40, it is displayed; otherwise, it is not.

What makes the algorithm unique is how the score is calculated. Unlike simplistic algorithms, which just calculate some sort of sum or average of user ratings and use that as the final result, the Community Notes rating algorithm explicitly tries to prioritize Notes that get positive ratings from people with different perspectives. That is, if people who usually disagree on ratings end up agreeing on a particular Note, then that Note will be rated highly.

Let's take a deeper look at how it works. We have a set of users and a set of Notes; we can create a matrix M where cell M_ij represents how the i-th user rated the j-th Note.

For any given Note, most users have not rated it, so most entries in the matrix will be zero, but that's okay. The goal of the algorithm is to create a four-column model of users and Notes, assigning each user two statistics, which we can call "friendliness" and "polarity", and assigning each Note two statistics, which we can call "usefulness" and "polarity". The model tries to predict the matrix as a function of these values, using the following formula:
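A sketch of that formula, assuming the form given in the Birdwatch paper, with $u$ indexing users and $n$ indexing Notes (the symbols $\mu$, $i_u$ and $i_n$ come from that paper, not from the text above):

$$\hat{r}_{un} = \mu + i_u + i_n + f_u \cdot f_n$$

Read this way, $i_u$ is the user's friendliness, $i_n$ is the Note's usefulness, $f_u$ and $f_n$ are the user's and the Note's polarity, and $\mu$ is a global offset shared by all ratings.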

Note that here I introduce both the terminology used in the Birdwatch paper and my own terms, to give a more intuitive sense of what the variables mean without getting too mathematical:

The algorithm uses a fairly basic machine learning model (standard gradient descent) to find the variable values that best predict the matrix values. The usefulness assigned to a particular Note is the final score for that Note. A Note will be displayed if its usefulness is at least +0.4.
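To make the fitting step concrete, here is a minimal sketch in pure Python. It assumes the prediction has the form mu + i_u + i_n + f_u * f_n and, for brevity, uses stochastic gradient descent (one observed rating at a time) rather than a full-batch descent. The function name `fit_notes_model` and the `lr`, `reg` and `steps` values are illustrative assumptions, not taken from the real Community Notes code, which adds many more mechanisms.

```python
import random

def fit_notes_model(ratings, n_users, n_notes, steps=2000, lr=0.05, reg=0.03, seed=0):
    """Fit rating ~ mu + i_u + i_n + f_u * f_n on observed cells only.

    ratings: list of (user_index, note_index, value) triples.
    Returns (mu, user_friendliness, note_usefulness, user_polarity, note_polarity).
    """
    rng = random.Random(seed)
    mu = 0.0
    i_u = [0.0] * n_users  # per-user intercept ("friendliness")
    i_n = [0.0] * n_notes  # per-Note intercept ("usefulness")
    # Small random start so the polarity factors can move away from zero.
    f_u = [rng.uniform(-0.1, 0.1) for _ in range(n_users)]
    f_n = [rng.uniform(-0.1, 0.1) for _ in range(n_notes)]
    for _ in range(steps):
        for u, n, r in ratings:
            err = r - (mu + i_u[u] + i_n[n] + f_u[u] * f_n[n])
            mu += lr * err
            i_u[u] += lr * (err - reg * i_u[u])
            i_n[n] += lr * (err - reg * i_n[n])
            fu_old = f_u[u]  # keep the old value for the f_n update
            f_u[u] += lr * (err * f_n[n] - reg * f_u[u])
            f_n[n] += lr * (err * fu_old - reg * f_n[n])
    return mu, i_u, i_n, f_u, f_n

# Toy data: users 0-1 and users 2-3 form two camps.
# Note 0 is liked by everyone; Note 1 is liked by one camp only.
toy = [(0, 0, 1), (1, 0, 1), (2, 0, 1), (3, 0, 1),
       (0, 1, 1), (1, 1, 1), (2, 1, 0), (3, 1, 0)]
mu, friendliness, usefulness, user_polarity, note_polarity = fit_notes_model(toy, 4, 2)
print(usefulness, user_polarity)
```

On the toy data, the Note liked by both camps should end up with the higher usefulness, while the two camps should receive polarity values of opposite sign.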

The core ingenuity here is that "polarity" absorbs the characteristics of a Note that cause it to be liked by some users and disliked by others, while "usefulness" measures only the characteristics that cause it to be liked by all users. Selecting for usefulness thus identifies Notes that are approved across tribes and excludes Notes that are cheered in one tribe but disliked in another.

The above only describes the core part of the algorithm. In fact, there are many additional mechanisms added on top of it. Fortunately, they are described in public documentation. These mechanisms include the following:

All in all, you end up with some pretty complex Python code totaling 6282 lines spread across 22 files. But it's all open, and you can download the Notes and scoring data and run it yourself to see if the output matches what's actually happening on Twitter.

So what does this look like in practice?

Probably the biggest difference between this algorithm and simply averaging people's votes is the concept of what I call "polarity" values. The algorithm documentation refers to them as f_u and f_n, using f for factor because the two terms multiply each other; the more general terminology is partly due to the eventual desire to make f_u and f_n multidimensional.

Polarity is assigned to both users and Notes. The link between a user ID and the underlying Twitter account is intentionally kept private, but Notes are public. In practice, at least for the English dataset, the algorithm-generated polarity correlates very closely with left versus right.

Here are some Notes examples with a polarity around -0.8:

Note that I'm not cherry-picking here; these are actually the first three rows in the scored_notes.tsv spreadsheet that I generate when I run the algorithm locally whose polarity scores (called coreNoteFactor1 in the spreadsheet) are less than -0.8.

Now, here are some Notes with a polarity around +0.8. As it turns out, many of them were either people talking about Brazilian politics in Portuguese or Tesla fans angrily rebutting criticism of Tesla, so let me cherry-pick a little and find some Notes that don't fall into either category:

As a reminder, the "left vs. right division" is not hardcoded into the algorithm in any way; it is discovered computationally. This suggests that if you apply this algorithm to other cultural contexts, it can automatically detect their main political divisions and build bridges between them.

Meanwhile, the Notes that get the highest usefulness look like this. This time, since the Notes actually show up on Twitter, I can just screenshot one:

And another:

The second Note deals more directly with highly partisan political themes, but it is clear, high-quality, and informative, and it gets a high score as a result. Overall, the algorithm seems to work, and verifying its output by running the code seems feasible.

What do I think about the algorithm?

What struck me most when analyzing this algorithm was its complexity. There's the "academic paper version", which uses gradient descent to find the best fit to a five-term vector and matrix equation, and then there's the real version, a complicated series of many different executions of the algorithm with a lot of arbitrary coefficients along the way.

Even the academic paper version hides underlying complexity. The equation it optimizes is a quartic (degree-4) one, because there is a quadratic f_u * f_n term in the prediction formula and the cost function measures the square of the error. While optimizing a quadratic equation over any number of variables almost always has a unique solution, which you can find with fairly basic linear algebra, optimizing a quartic equation over many variables usually has many solutions, so multiple runs of the gradient descent algorithm may arrive at different answers. Small input changes can cause the descent to flip from one local minimum to another, changing the output significantly.
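The multiple-solutions point can be seen on a toy problem. The sketch below minimizes (1 - a*b)^2, a quartic with a single product term, by gradient descent from two starting points. The loss and the function name `descend` are assumptions chosen for illustration; this is not the Community Notes cost function.

```python
def descend(a, b, steps=500, lr=0.1):
    """Minimize (1 - a*b)**2 by gradient descent from the start point (a, b)."""
    for _ in range(steps):
        err = 1.0 - a * b
        # d/da (1 - a*b)^2 = -2*err*b, and symmetrically for b.
        a, b = a + lr * 2 * err * b, b + lr * 2 * err * a
    return a, b

print(descend(0.5, 0.5))    # settles near (1, 1)
print(descend(-0.5, -0.5))  # settles near (-1, -1)
```

Both end points fit the target equally well, since flipping the sign of both factors leaves the product unchanged. Which one the descent reaches depends only on where it started.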

The difference between this and the algorithms I helped develop, such as quadratic funding, is to me like the difference between an economist's algorithm and an engineer's algorithm. An economist's algorithm, at its best, values simplicity, is relatively easy to analyze, and has clear mathematical properties showing that it is optimal (or least bad) for the task at hand, ideally with proven bounds on how much damage someone can do by trying to exploit it. An engineer's algorithm, on the other hand, is derived through an iterative trial-and-error process of seeing what works and what doesn't in the engineer's operating environment. An engineer's algorithm is pragmatic and gets the job done; an economist's algorithm doesn't completely lose control in the face of the unexpected.

Or, as respected internet philosopher roon (aka tszzl) put it in a related thread:

Of course, I would say that the "theoretical aesthetics" side of crypto is necessary precisely in order to distinguish protocols that are truly trustless from those that look good and superficially work well but actually require trusting some centralized actor, or worse, are outright scams.

Deep learning works under normal conditions, but it has inevitable weaknesses against all kinds of adversarial machine learning attacks. "Nerd traps" and sky-high abstraction ladders, if done right, can be quite robust against these attacks. So, I have a question: can we turn Community Notes itself into something more like an economist's algorithm?

To see in practice what this means, let's explore an algorithm I devised for a similar purpose a few years ago: Pairwise-bounded quadratic funding.

The goal of pairwise-bounded quadratic funding is to plug a loophole in "conventional" quadratic funding, where even just two participants who collude with each other can each contribute a very large amount to a bogus project that returns the funds to them, and collect a large subsidy that drains the entire pool. In pairwise-bounded quadratic funding, we assign a finite budget M to each pair of participants. The algorithm iterates over all possible pairs of participants, and if it decides to add a subsidy to some project P because both participant A and participant B support it, then this subsidy is deducted from the budget allocated to the pair (A, B). Therefore, even if k participants collude, the amount they can steal from the mechanism is at most k * (k-1) * M.
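The pair-budget idea can be sketched as follows. This is a simplified illustration of the description above, assuming a hard cap per pair; the published pairwise-bounded formula may differ in detail. The function name `pairwise_bounded_subsidies` and the data layout are hypothetical. It relies on the fact that the standard quadratic funding subsidy, (sum of sqrt(c_i))^2 - sum(c_i), equals the sum over all pairs of 2 * sqrt(c_a * c_b).

```python
from itertools import combinations
from math import sqrt

def pairwise_bounded_subsidies(contributions, pair_budget):
    """contributions: {project: {participant: amount}}.

    Each unordered pair of participants can unlock at most
    pair_budget in subsidies, summed across all projects.
    """
    remaining = {}  # unordered pair -> budget still available
    subsidies = {}
    for project, backers in contributions.items():
        total = 0.0
        for a, b in combinations(sorted(backers), 2):
            wanted = 2 * sqrt(backers[a] * backers[b])  # this pair's share of the QF subsidy
            left = remaining.get((a, b), pair_budget)
            granted = min(wanted, left)
            remaining[(a, b)] = left - granted
            total += granted
        subsidies[project] = total
    return subsidies

# Two colluders pour money into a bogus project; the pair cap limits the payout.
print(pairwise_bounded_subsidies({"bogus": {"A": 10000, "B": 10000}}, pair_budget=100))
```

In this sketch, k colluders form k * (k-1) / 2 unordered pairs, so they can extract at most that many times M, which stays within the k * (k-1) * M bound stated above.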

This form of the algorithm does not work well in the context of Community Notes, since each user casts only a small number of votes: on average, any two users have zero votes in common, so by simply looking at each pair of users individually, the algorithm has no way of learning users' polarity. The goal of the machine learning model is precisely to "fill in" the matrix from very sparse source data that cannot be analyzed directly in this way. But the challenge with this approach is that extra effort is required to avoid highly volatile results in the face of a small number of bad votes.

Can Community Notes really resist polarization?

We can analyze whether the Community Notes algorithm actually resists polarization, that is, whether it performs better than a naive voting algorithm. A naive voting algorithm is already somewhat resistant to polarization: a post with 200 likes and 100 dislikes will do worse than a post with only 200 likes. But does Community Notes do better?

From an abstract algorithm point of view, it's hard to say. Why can't a polarizing post with a high average rating get strong polarity and high usefulness? The idea is that if those votes are conflicting, the polarity should "absorb" the feature that caused the post to get a lot of votes, but does it actually do that?

To check this, I ran my simplified implementation for 100 rounds. The average result is as follows:

In this test, "good" Notes were rated +2 by users of the same political affiliation and +0 by users of the opposite affiliation, while "good but more extreme" Notes were rated +4 by users of the same affiliation and -2 by users of the opposite affiliation. Although the average score is the same, the polarity is different. And in fact, the average usefulness of "good" Notes seems to be higher than that of "good but more extreme" Notes.
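The point about identical averages is easy to check by hand. A small sketch, assuming the two factions are the same size (the helper names are hypothetical):

```python
def naive_average(same_side_rating, other_side_rating):
    """Average rating when both factions are the same size."""
    return (same_side_rating + other_side_rating) / 2

def spread(same_side_rating, other_side_rating):
    """Gap between how the two factions rate the Note."""
    return same_side_rating - other_side_rating

good = naive_average(+2, 0)
extreme = naive_average(+4, -2)
print(good, extreme)                 # the two averages are equal
print(spread(+2, 0), spread(+4, -2)) # the gaps are not
```

A naive average cannot tell the two kinds of Note apart; only the gap between factions, which is what the polarity term is meant to absorb, distinguishes them.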

An algorithm closer to the "economist's algorithm" would come with a clearer story of how it punishes polarization.

How useful is all this in high-stakes situations?

We can learn something about this by looking at a specific case. About a month ago, Ian Bremmer complained that a highly critical Community Note that had been added to a tweet by a Chinese government official was later removed.

This is heavy stuff. Mechanism design is one thing in an Ethereum community environment, where the biggest complaint might just be $20,000 going to a polarizing Twitter influencer. It's a different story when it comes to political and geopolitical issues that affect millions of people, where everyone tends, often reasonably, to assume the worst possible motives. But interacting with these high-stakes environments is essential if mechanism designers want to have a significant impact on the world.

In the case of Twitter, there is an obvious reason to suspect centralized manipulation as the cause of the Note's removal: Elon Musk has many business interests in China, so it is possible that he forced the Community Notes team to intervene in the algorithm's output and delete this particular Note.

Luckily, the algorithm is open-source and verifiable, so we can actually get to the bottom of it! Let's do this. The number at the end of the original tweet's URL, 1676157337109946369, is the ID of the tweet. We can search for that ID in the downloadable data and identify the specific row in the spreadsheet that has the above Note:

Here we have the ID of the Note itself, 1676391378815709184. We then search for this ID in the scored_notes.tsv and note_status_history.tsv files generated by running the algorithm. We get the following result:
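A lookup like this can be sketched with Python's standard csv module. The helper name `find_rows` is hypothetical, and the column name `noteId` is an assumption about the file's header row; check it against the actual files before relying on it.

```python
import csv

def find_rows(lines, column, value):
    """Return all rows of a tab-separated file whose `column` equals `value`.

    `lines` is any iterable of text lines, for example an open file.
    """
    reader = csv.DictReader(lines, delimiter="\t")
    return [row for row in reader if row.get(column) == value]

# Example usage against a locally generated file (path and column assumed):
# with open("scored_notes.tsv", newline="") as f:
#     rows = find_rows(f, "noteId", "1676391378815709184")
```

Comparing IDs as strings avoids any precision loss that could come from parsing such large numbers as floats.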

The second column in the first output is the Note's current rating. The second output shows the Note's history: its current status is in column seven (NEEDS_MORE_RATINGS), and the first status it received that wasn't NEEDS_MORE_RATINGS is in column five (CURRENTLY_RATED_HELPFUL). So we can see that the algorithm itself first showed the Note and then removed it after its rating dropped slightly; no central intervention seems to be involved.

We can also look at this another way, by looking at the votes themselves. We can scan the ratings-00000.tsv file to isolate all ratings for this Note and see how many rated it HELPFUL and how many NOT_HELPFUL:

However, if you sort them by timestamp and look at the first 50 votes, you'll see 40 HELPFUL votes and 9 NOT_HELPFUL votes. So we come to the same conclusion: the Note was rated more positively by its initial audience and less positively by its later audience, so its rating started high and declined over time.
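The count over the earliest votes can be sketched like this. The function name `count_early_ratings` is hypothetical, and the column names `noteId`, `createdAtMillis` and `helpfulnessLevel` are assumptions about the ratings file's header.

```python
import csv
from collections import Counter

def count_early_ratings(lines, note_id, first_n=50):
    """Tally rating labels among the earliest `first_n` ratings of one Note."""
    reader = csv.DictReader(lines, delimiter="\t")
    rows = [r for r in reader if r["noteId"] == note_id]
    rows.sort(key=lambda r: int(r["createdAtMillis"]))  # oldest first
    return Counter(r["helpfulnessLevel"] for r in rows[:first_n])

# Example usage (path assumed):
# with open("ratings-00000.tsv", newline="") as f:
#     print(count_early_ratings(f, "1676391378815709184"))
```

Passing a larger `first_n` than the number of available ratings gives the overall tally, so the same helper covers both the early and the full comparison.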

Unfortunately, it's hard to explain exactly how the Note changed status: it's not a simple matter of "previously rated above 0.40, now rated below 0.40, so it's removed". Instead, the high number of NOT_HELPFUL ratings triggers one of the exception conditions, raising the usefulness score that the Note needs to stay above the threshold.

This is another learning opportunity with a clear lesson: making a credibly neutral algorithm truly credible requires keeping it simple. If a Note goes from being accepted to not being accepted, there should be a simple and clear story as to why.

Of course, there is another, completely different way this vote can be manipulated: brigading. Someone who sees a Note they don't approve of can call on a highly engaged community (or worse, a legion of fake accounts) to rate it NOT_HELPFUL, and it probably doesn't take many votes to move the Note from "useful" to "polarized". More analysis and work is required to properly reduce the algorithm's vulnerability to such coordinated attacks. A possible improvement would be to not allow any user to vote on any Note, but instead to use the "For you" algorithmic feed to randomly assign Notes to raters, and to allow raters to rate only the Notes they have been assigned.
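The random-assignment idea can be sketched as follows. This is an assumption-laden illustration: it uses uniform random sampling as a stand-in for whatever the "For you" feed would do, and the names `assign_notes` and `may_rate` are hypothetical.

```python
import random

def assign_notes(note_ids, rater_ids, raters_per_note, seed=None):
    """Randomly pick which raters are allowed to rate each Note.

    rater_ids must be a list; returns {note_id: set of assigned rater ids}.
    """
    rng = random.Random(seed)
    return {note: set(rng.sample(rater_ids, raters_per_note)) for note in note_ids}

def may_rate(assignment, rater, note):
    """A rating is accepted only from a rater assigned to that Note."""
    return rater in assignment.get(note, set())

assignment = assign_notes(["n1", "n2"], ["r1", "r2", "r3", "r4"], raters_per_note=2, seed=1)
print(assignment)
```

Under this scheme, a brigade cannot choose which Note to pile onto; its members can only influence a Note when the sampler happens to select them.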

Is Community Notes not "brave" enough?

The main criticism I see of Community Notes is basically that it doesn't do enough. I saw two recent articles mentioning this. To quote one of the articles:

The process suffers from a serious limitation in that for Community Notes to become public, they must be generally accepted by a consensus of people across the political spectrum.

"It has to have an ideological consensus," he said. "That means people on the left and people on the right have to agree that the note has to be attached to the tweet."

Essentially, he said, it requires "a cross-ideological agreement on truth that is nearly impossible in an increasingly partisan environment."

It's a tricky question, but ultimately I'm inclined to think that it's better to let ten misinformative tweets go free than to annotate one tweet unfairly. We've seen years of fact-checking that is brave and comes from an "actually we know the truth, and we know one side lies more often than the other" perspective. And what has been the result?

To be honest, there is fairly widespread distrust of the very concept of fact-checking. Here, one strategy is to say: ignore the critics, remember that fact-checkers do know the facts better than any voting system, and stick with it. But going all-in on this approach seems risky. There is value in building cross-tribal institutions that are at least somewhat respected by everyone. As with William Blackstone's dictum and the courts, I feel that maintaining that respect requires a system that commits errors of omission far more often than errors of commission. So it seems valuable to me that at least one major organization is taking this different path and treating its rare cross-tribal respect as a precious resource.

Another reason I think it's okay for Community Notes to be conservative is that I don't think every misinformative tweet, or even most misinformative tweets, should receive a corrective Note. Even if less than one percent of misinformative tweets get a Note providing context or correction, Community Notes still provides an extremely valuable service as an educational tool. The goal is not to correct everything; rather, the goal is to remind people that there are multiple viewpoints, that some posts that seem convincing and engaging in isolation are actually quite wrong, and that you, yes, you, can usually do a basic internet search to verify that they are wrong.

Community Notes cannot be, and is not intended to be, a panacea for all problems in public epistemology. Whatever problems it doesn't solve, there's plenty of room for other mechanisms to fill in, whether newfangled gadgets like prediction markets or established organizations hiring full-time staff with domain expertise.

Conclusion

Community Notes is not only a fascinating experiment in social media, but also a fascinating example of an emerging type of mechanism design: mechanisms that consciously try to identify polarization and favor things that bridge divides rather than perpetuate them.

Two other examples in this category that I am aware of are: (i) the pairwise quadratic funding mechanism used in Gitcoin Grants, and (ii) Polis, a discussion tool that uses clustering algorithms to help communities identify statements that are popular across people who often hold different opinions. This field of mechanism design is valuable, and I hope we see more academic work in this area.

The algorithmic transparency that Community Notes provides isn't exactly fully decentralized social media - if you don't agree with how Community Notes works, there's no way to see a different algorithmic perspective on the same content. But it is the closest that hyperscale applications will get in the next few years, and we can see that it already provides a lot of value, both by preventing centralized manipulation and by ensuring that platforms that don't engage in such manipulation get the recognition they deserve.

I look forward to seeing Community Notes and many algorithms of a similar spirit develop and grow over the next decade.