- Topic

611 Popularity

4k Popularity

284 Popularity

30k Popularity

31k Popularity

50k Popularity

18k Popularity

29k Popularity

158k Popularity

1833k Popularity

- Pin

- 🎊 ETH Deposit & Trading Carnival Kicks Off!

Join the Trading Volume & Net Deposit Leaderboards to win from a 20 ETH prize pool

🚀 Climb the ranks and claim your ETH reward: https://www.gate.com/campaigns/site/200

💥 Tiered Prize Pool – Higher total volume unlocks bigger rewards

Learn more: https://www.gate.com/announcements/article/46166

- 📢 ETH Heading for $4800? Have Your Say! Show Off on Gate Square & Win 0.1 ETH!

The next bull market prophet could be you! Want your insights to hit the Square trending list and earn ETH rewards? Now’s your chance!

💰 0.1 ETH to be shared between 5 top Square posts + 5 top X (Twitter) posts by views!

🎮 How to Join – Zero Barriers, ETH Up for Grabs!

1.Join the Hot Topic Debate!

Post in Gate Square or under ETH chart with #ETH Hits 4800# and #ETH# . Share your thoughts on:

Can ETH break $4800?

Why are you bullish on ETH?

What's your ETH holding strategy?

Will ETH lead the next bull run?

Or any o

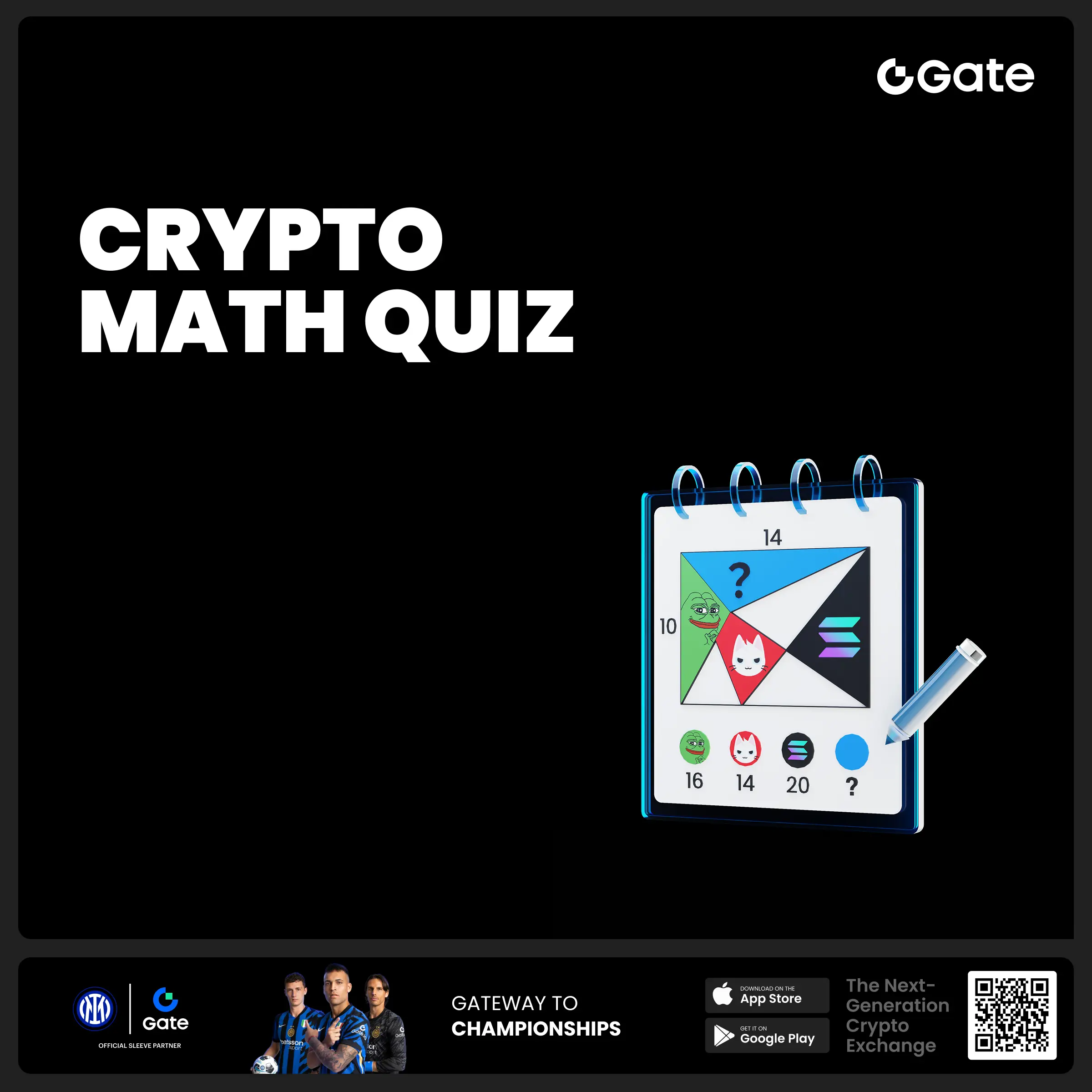

- 🧠 #GateGiveaway# - Crypto Math Challenge!

💰 $10 Futures Voucher * 4 winners

To join:

1️⃣ Follow Gate_Square

2️⃣ Like this post

3️⃣ Drop your answer in the comments

📅 Ends at 4:00 AM July 22 (UTC)

- 🎉 [Gate 30 Million Milestone] Share Your Gate Moment & Win Exclusive Gifts!

Gate has surpassed 30M users worldwide — not just a number, but a journey we've built together.

Remember the thrill of opening your first account, or the Gate merch that’s been part of your daily life?

📸 Join the #MyGateMoment# campaign!

Share your story on Gate Square, and embrace the next 30 million together!

✅ How to Participate:

1️⃣ Post a photo or video with Gate elements

2️⃣ Add #MyGateMoment# and share your story, wishes, or thoughts

3️⃣ Share your post on Twitter (X) — top 10 views will get extra rewards!

👉

Jan Leike: How will OpenAI achieve super-alignment in 4 years?

By Daniel Filan @AXRP

Source: Overseas Unicorn

Recommended by: Cage Compiler: wenli, Yanxi Typesetting: Mengxi, Scout

OpenAI announced its "Superalignment" plan at the beginning of last month, and announced that it will devote 20% of its total computing power to this new direction at one time. OpenAI's co-founder and chief scientist Ilya Sutskever and the original alignment team leader Jan Leike will jointly lead this new project, with the goal of solving the core technical challenges of superintelligence alignment within 4 years to ensure that humans can control superintelligence.

In order to achieve this, OpenAI needs to first train an "automatic aligner that is at human level", and then use this "automatic aligner" to achieve alignment with super intelligence. According to the article Introducing Superalignment, "Automatic aligner" The design of "Aligner" also involves enabling AI to evaluate and supervise AI, verifying the security of the system based on explainability, and using unaligned models to perform perturbation testing on the system.

This article is compiled from an interview with Jan Leike, and it is Jan Leike's more detailed technical thinking on how OpenAI can achieve "super alignment".

**The following is the table of contents of this article. It is recommended to read it in combination with the key points. **

👇

01 Superalignment Team

02 Let the model "autonomously align"

03 Superalignment schedule

04 Generalization

05 Stay optimistic about Superalignment

01.Superalignment Team

**Daniel Filan: Can you introduce the Superalignment team first? **

Jan Leike: The Superalignment team's goal is to solve the super-intelligent alignment problem in the next 4 years. Ilya Sutskever, co-founder and chief scientist of OpenAI, will also join the team and co-lead this project with me. In addition, OpenAI will also devote 20% of its computing resources to this topic. We are also actively recruiting talents to join this project team. **We very much hope to attract machine learning experts and engineers who have not engaged in alignment research. Perhaps these people can exert great potential on this issue. **

We designed a preliminary working framework. The core idea is to first train an automated human-level alignment researcher (automated human-level alignment researcher), and then let it continue to study how to complete the work of Superintelligence alignment. So one of the key things we have to do is figure out how to "align" this auto-aligner.

**Daniel Filan: How big will this new team be? **

Jan Leike: We have about 20 people now, and it may reach 30 people by the end of this year. In the next four years, the team will probably not exceed 100 people, but the way this team expands may be Have millions of "virtual humans", or at least as many "virtual humans" as OpenAI employees way to do alignment). From this level, we will definitely expand on a large scale in the future.

**Daniel Filan: You mentioned that OpenAI will provide this team with 20% of computing power support. What does this 20% mean? **

Jan Leike: For OpenAI, allocating 20% of the computing power to this team is not a small number. This is definitely our largest investment in alignment to date, and probably exceeds all others. sum of investments. **So, in that sense, 20% of computing resources is quite a large percentage for OpenAI. In addition, if we make this figure extremely large, some people will definitely question "Can OpenAI really do this?" But in fact, for OpenAI, if we want to continue to develop the most cutting-edge models and analyze the most advanced AI The system is pre-trained, which will require a lot of computing resources.

Jan Leike: The alignment team established last year has two parts, one is called "Practical alignment" and the other is called "Scalable alignment". The Pragmatic Alignment team focuses on the alignment of GPT-4, and the Scalable Alignment team aims to study alignment problems that we have not yet solved. With the release of ChatGPT and subsequent success, the importance of ChatGPT and the scale of the product are constantly increasing, requiring a larger volume of RLHF and models to ensure that the functions and experience of the product are sufficiently complete, and the alignment team is no longer fit to do this.

The reason why we choose the name Superalignment is because we want to emphasize that what we are studying at this stage is actually a problem that has not yet appeared. Our research is relatively forward-looking and future-oriented.

**Daniel Filan: How do you view the attempts of people or teams outside OpenAI on alignment? **

**Jan Leike: **There are many people or teams outside of OpenAI who are also trying related work, especially DeepMind and Anthropic. To some extent, we are all trying to solve the same problem, so we end up doing similar work. It's also normal. There are other works on interpretability and scalable supervision.

In a way, we're actually running the risk of duplicating a bunch of work, so ideally trying to figure out how to coordinate better or collaborate more. But if everyone is doing the same thing, it can avoid "group thinking", because if each laboratory wants to solve these problems independently, it will naturally doubt the results of other laboratories, and the negative side will produce "group thinking". Either-or effect: people are unwilling to use technologies invented elsewhere, and people will naturally think that technologies other than their own are not good, or look at them with some kind of prejudice.

So it's not in a good balance right now, and while there's a reason to think that all alignment people should be in one place and work together in some way, that's the reality because by their very nature cutting edge AI labs have motivation Invest a lot of resources in the matter of "alignment". This has also become evident with the success of RLHF, which makes the models more commercially viable, making it more attractive to invest in research into such techniques.

**Daniel Filan: How is the OpenAI Superalignment Team’s approach different? **

Jan Leike: We're really focused on how to align this auto-aligner, rather than figuring out how to align various tasks. So, at least on this issue, we're not too worried about the alignment tax. I don't think other labs emphasize this goal or direction in this way.

Alignment tax:

Also known as a security tax, it refers to the additional cost of ensuring alignment of AI systems. The alignment tax under RLHF mentioned in this article means that in order to do RLHF, the ability of the base model is lost in order to achieve alignment, such as increased development time, additional calculations or performance degradation.

**We are very optimistic about trying all the scalable alignment techniques to see which ones work best and trying to find ways to empirically compare them. Other labs have specific scalable surveillance technologies that they are very excited about and they are trying to use those technologies as well. In addition, in terms of interpretability, we are adopting automated interpretability methods and are also promoting them vigorously, but other laboratories have not yet paid so much attention to this method. **

The other thing we really want to do is use computation to advance alignment, which is one of our main strategies, especially in terms of scalable supervision, we really want to figure out, how can we get more computing power to send out better supervisory signals? What opportunities do we have? How to make the criticism model (Critique model) better? How to use more computing power to make the supervision signal stronger? Automated interpretability (Automated interpretability) is a very simple method, we only need to invest a lot of computing power to make progress on this problem.

Critique model:

is an independent language model. It reviews the results of the first AI system before writing reviews.

In addition, there are automated calibration studies: if this can be done, we can obtain more alignment results by investing more computing power. But since what we really want to do is convert the amount of computing power into alignment capabilities, so now we need a lot of computing power, and this is why OpenAI is willing to use 20% of computing power for alignment. This basically says that if we do find out about this auto-aligner and find out that we need more computing power, we can use more computing power to run it. This also means that the strategy of converting computing power into alignment is successful and will be supported by OpenAI.

02. Let the model "autonomously align"

What is an "Auto Aligner"

**Daniel Filan: What is an "automated human-level alignment researcher"? **

**Jan Leike: Our goal is to use automated systems to disassemble and distribute the tasks in the alignment work as much as possible. **

For language models or other AI systems, the work they can accomplish is not 100% consistent with humans. For example, LLMs may be better than humans at things like translating or answering factual questions, but they may not be as good at arithmetic calculations or some other tasks. **So the question is, in what order and what tasks do we need to assign to AI to handle it, so as to free up the limited energy of human researchers? **As a result, human teams will be able to complete critical work more efficiently, while AI will also take on an increasing number of auxiliary tasks.

**In general, the proportion of AI participating in the work will be higher and higher, while human researchers will pay more attention to those tasks that are not taken over by AI, and accelerate the research of superintelligence alignment more practically through human-machine collaboration. **

**Daniel Filan: So it is not to use AI to replace some human employees in the OpenAI alignment team, but to use AI to complete a specific type of work that everyone is doing, and then replace it with AI step by step More tasks to perform? **

Jan Leike: Yeah, I think if we want this system to be productive enough, 99% or 99.9% of the tasks should be automated so we can get 10x, 100x, maybe even 1000x double the research results.

I'll broadly divide the "tasks" mentioned here into two broad categories. One is more traditional machine learning engineering research tasks, the purpose of which is to help improve the capabilities of AI systems, such as implementing various ML experiments and collecting experimental results.

The other category is what must be done in order to achieve Superintelligence alignment. This type of problem is relatively larger and higher-level (high-level). For example, in order to improve scalability supervision (Scalable Oversight), how do we decide which experiments to run? Or how to make progress on interpretability. Of course, there must be some very specific questions that need to be answered. For example, when a certain research reaches a certain stage, it is necessary to clarify a series of problems that need to be solved in the follow-up, such as very detailed questions.

Scalable Oversight:

The goal of scalability supervision is to ensure that model capabilities can still be consistent with human expectations and continue to improve and learn after exceeding human levels. This requires researchers to think about how to increase the model's capacity, align the model's values, and continuously monitor the model's performance. The focus of scalable supervision is how to continuously provide reliable supervision to the model. This supervision can be in various forms, such as labels, reward signals, or criticisms.

I expect that machine learning can do the first type of tasks, which is designing and automatically running experiments, very well, and the unique work we are doing today to accelerate the progress of alignment is to figure out how to automate the second type. method of the task. **

**Daniel Filan: The second type of task seems to be a whole-process task? Not just figuring out research directions, figuring out what might be helpful, even down to "what script do I run now". **

**Jan Leike: **This question can actually be asked like this: **Since alignment research is similar to traditional ML research to a large extent, what other tasks of the second type can be done? **

**I think there is actually a lot involved in the second type of tasks, and the research leverage in this part is very large. **Because from the perspective of research topics, we have not even reached a consensus on "how to define alignment". Even industry experts are still wondering about "the technical route that is most likely to realize alignment" or "what work should be done next" “There are differences on these issues. Therefore, if the alignment can be accelerated, the impact must be huge. This is also the vision and direction we told researchers when we called on researchers to join the OpenAI Superalignment team.

At this stage, we are still solving some basic problems, and there is still a lot of work to be done on alignment research. We don't know how to align superintelligence, and even just aligning AI systems with higher than human intelligence is quite difficult.

**Daniel Filan: You mentioned the concept of a human-level automatic aligner, but it seems that most things in AI are not quite human-level. How important is "human-level" in this goal? Is it a good thing or a bad thing if AI does surpass human performance on some of the tasks you mentioned? **

**Jan Leike: I think the key to this question is how risky it is to have this kind of human-level system in alignment research. **

It is not terrible that the AI system has a lot of knowledge, but when this system takes over some (in the long run, most) of the alignment research, we need to consider whether it will lie to humans? Will AI try to trick us and take over the system?

Because we really don't currently understand how a lot of the model's behavior occurs, the real question we face is what kind of skills do we need to understand its behavior and risks, and is it comparable to what we need to build an automated researcher for hyperalignment? How do the skills compare?

If we probe this further, what are our real concerns? That might be, is the model spinning a series of lies that can deceive humans? Are models already fooling humans? Are you pretending to do something or believe something when in reality it is directed towards another goal?

Therefore, it is also critical to evaluate whether a model will jailbreak (self-exfiltration): how capable is the model of being able to break the system's security precautions, obtain model weight parameters, and try to replicate them elsewhere on the Internet? Or, is it possible for the model to download this data and send it elsewhere by convincing a human engineer with access to the weights? We can also measure the model's ability in this area. In these critical aspects, I hope that the model's ability is not too strong.

**Daniel Filan: A human-level automatic aligner needs to be very smart, creative, and capable of task planning. It must also be very good at thinking about alignment-related issues. In this case, there are voices that such a powerful tool itself It is very threatening to humans. If the task is to align automatic alignment researchers, are there any other problems it needs to solve? **

**Jan Leike: I think this is ultimately an experience-driven thing. **

We might start by thinking about this at a macro level. For example, it is quite obvious that once the model's ability is improved, we will naturally let the model help us to achieve some alignment research work, and while the model helps us to conduct research, its own ability has been improved, so from As a result, we can use this to quickly train a more powerful model.

This story sounds exciting at first glance, but in practice it’s actually quite complicated. First of all, model pre-training usually takes several months, not weeks, so we need to use this generation of models until the new generation of models is born. Another question that doesn’t yet have a clear answer is: Are there still a lot of “low-hanging fruits” when it comes to improving computing power?

I think that compared with alignment, the entire AI community's investment and attention in improving the speed and capabilities of AI are quite large. If we can automate more of these tasks to benefit both communities, then in the scale of the alignment community In smaller cases, the marginal benefit it brings will be higher.

**Daniel Filan: When it comes to evaluating alignment as a research direction, what do you think the long-term goal of this automatic aligner will be? **

Jan Leike: I think language models or artificial intelligence in general are more creative than humans on average. For example, images generated by a diffusion model, or samples from a pre-trained basic model, will definitely find many unexpected things, so the creativity of the model is particularly strong, and it is difficult for us to learn from someone Or a small group of humans, and the model can do this because it has learned all the words that humans have said or all the images on the Internet, so as to complete the sampling on this large-scale distribution, which a single human cannot do to this point.

As far as long-term goals are concerned, I think there is no need to deliberately pursue the so-called long-term at all, because we can first hand over short-term tasks to AI. If they are good at these tasks, that is quite enough. **For example, it can be a very small-scale thing, such as "This is a paper we just wrote. Please make some suggestions for the next step or what new experiments can be implemented." Imagine that we are actually asking a real star AI researcher to ask questions, so they don't have to pursue long-term goals, they just need to help us optimize the next small goals, maybe a few thousand tokens, if they can do this well , can already bring a lot of value to human beings.

**Daniel Filan: This seems to conflict with the goal of automating 99.9% of alignment tasks mentioned earlier? In my opinion, one of the keys to do a good job of alignment research is to continue to think and solve the problem of "what is needed to really get an aligned AI"? **

Jan Leike: That’s right. But what I want to express is **When the system completes these tasks well, it has achieved a lot of value, and what we humans have to do is to combine these tasks. **For example, some tasks are "write the code to implement these experiments", while other tasks are "look at the results and tell me what you saw", or "suggest what to do next". Essentially, once the model has completed these tasks, we can combine them in some general way, just like one would do in Auto-GPT or a language model program, where each task is small and automatically integrated so that the system does not need to deliberately pursue some large, long-term goal.

For example, OpenAI's recent Let's Verify Step by Step uses process-based feedback in mathematics to train a reward model based on human feedback on each step of the proof process, rather than training "whether the system got the correct answer" solution?". This has proven to be more effective because it provides the AI system with a more granular way of learning and more detailed feedback. But in the longer term, can this compete with end-to-end reinforcement learning? We don’t know yet, but at least for now, we can use this detailed breakdown of steps to make the system do a lot of really useful things that humans would do, and then put those things together.

Let's Verify Step by Step:

A study published in May 2023 by Hunter Lightman et al. Focusing on the problem of logical errors that often occur in complex multi-step reasoning tasks of large models, the author compares two methods of result supervision and process supervision: result supervision mainly provides feedback for the final result, while process supervision provides feedback for each intermediate reasoning step. feedback. The study found that process supervision significantly outperformed outcome-supervised training models, especially on mathematical problems. Furthermore, the authors found that active learning significantly improved the effectiveness of process supervision.

**Daniel Filan: One of the mini-tasks you mentioned is "see the results and decide what to do next." If you want to do this, you have to think about which specific project will be most useful in achieving the goal of superintelligence alignment in four years? **

**Jan Leike: You're right. Not through optimization and long-term credit assignment, though, more like adding some broader goals and context to the prompt. **

However, in practical applications, when we improve systems through reinforcement learning (RL) or reinforcement learning based on human feedback (RLHF), we do not actually need to wait until the end of the research project to draw conclusions about whether these methods are effective. Instead, we can use human feedback as a basis for suggesting rewards, simply asking ourselves: “Does this direction look better than any direction I might have thought of on my own?”

** Therefore, I think the overall goal of Superalignment is not to achieve the most powerful automatic alignment under the current technology, but to build a very useful and large-scale application system. Most importantly, we believe that it can achieve alignment, so you can rest assured Leave these tasks to it. **

**Compared with task splitting, there may be a view that only end-to-end training can make the model more capable. But I think this is not so important. In fact, the end-to-end training method not only limits the model ability to a large extent, but also has low efficiency. This is what people usually call "alignment tax". **

The "alignment tax" is an important factor if you want to compete effectively with other companies in the market place: Suppose I'm building a chatbot that does a particularly good job of alignment but seems to be much less capable , which is actually very difficult to compete in the market. But if you have an auto-aligner, that auto-aligner doesn't need to compete in the market, it just needs to be useful to us. So we can accept a higher alignment cost because we have no substitute, or the real substitute is to hire more humans, but this way is not so scalable.

**Daniel Filan: What problems do you hope this automated alignment researcher will solve? **

Jan Leike: It should solve the problem of "how do we tune superintelligence". **Superintelligence alignment The actual solution may be quite different from the alignment we are doing today. **

ChatGPT's solution is to learn a lot from human feedback, namely RLHF (Reinforcement learning from human feedback). The general consensus at this stage is that this approach can be difficult to scale because it fundamentally assumes that humans need to fully understand the details of what the system is doing.

So if you ask the model to do large-scale alignment research, you can imagine a task equivalent to millions of human workloads. It is obviously impossible for humans to view all the data and give detailed feedback. This is quite difficult. , we will definitely overlook many important bugs in this process.

**The technology that the Superalignment team is currently working on is to make RLHF scalable and realize the alignment of the automatic aligner. **This automatic aligner is about the same level as humans. It can replace humans to complete these difficult tasks, but it will not do much differently from humans. These techniques we want to achieve are upgrades or seriousness to previous technology explorations, such as **Scalable supervision is a natural extension of RLHF. **

Scalable supervision is defined as the general combination of ideas and techniques that allow us to use AI to assist humans in evaluating difficult tasks. Supervision can be built from reinforcement learning with human feedback (RLHF).

Typical representatives of scalable supervision include debate, recursive reward modeling (RRM, recursive reward modeling), iterated distillation and amplification, automated market making, etc. There are many new methods emerging.

I think if we're really going to align with superintelligence, and think about systems that are smarter than humans, thinking faster, computing at completely new levels of scale, that's going to bring a whole host of other things The problem, especially because it's going to be super versatile and do a lot of things, and then you have to figure out how to align it, not just align it to the more narrowly distributed research tasks, but everything else. In addition, you need to verify that it is successful through a large number of empirical evaluations.

So right now, not just me, but everyone doesn’t know what the future will look like, but it would be very exciting if there could be some formal verification. Maybe we have found some theoretically guaranteed algorithm, but theory and subsequent practice may be very different, and even I don't think an alignment researcher that is roughly human-level will immediately start solving these problems. Instead, we hope they find ways to better align the next iteration so that, through guidance, we ultimately have a system that helps us fine-tune superintelligence.

**Daniel Filan: Once you have these human-level artificial intelligence alignment researchers, does OpenAI still need a superintelligence alignment team and corresponding employees? **

Jan Leike: That's a good question. I personally would be very excited if it could be replaced by AI. **But historically, the typical scenario is what we mentioned earlier: AI assistants do 99% or 99.9% of the work, and humans take care of the remaining 1% or 0.01%. **In the long run, even if we can no longer truly understand everything that AI does, we still need to ensure that humans should be involved in some way or always be able to control what AI is doing. In other words, there must be a human role to Trying to understand the high-level implications of what AI is doing doesn't necessarily have to be the current OpenAI Superalignment team, because the skill sets required may be very different from what we have now.

**Daniel Filan: OpenAI keeps mentioning in its blog that security is closely related to model capabilities, we need intelligent models to solve alignment problems, but at the same time, we hope not to be changed by model capabilities. There is a passage in Planning for AGI and beyond: "If AGI has enough ability to accelerate its own development, it may cause major changes to occur at an astonishing speed", "We think that relatively slow development of AGI is easier to ensure Safety". If we make a really smart or near-human-level aligner, and then effectively scale up the alignment team by 10 or 100 times, does that end up in a recursive self-improvement loop? **

Jan Leike: This is inevitable. It is impossible to have a recursive self-improvement loop without a massive improvement in alignment. I personally think that the possibility of AI achieving a leap in capabilities is quite high, and we must be prepared for it. If it hadn't happened, I would have been content.

If we look at other AI systems, such as AlphaGo, Dota, or StarCraft, these systems undergo substantial capability iterations almost on a weekly basis. As for exactly what will happen, we can't say for sure yet because there's a lot of uncertainty, but I think we should be prepared for that possibility. When this happens, a very good solution is to have automatically aligned researchers who can actually perform thousands of years' equivalent of work in a week, which humans cannot do.

How to design an automatic aligner

**Daniel Filan: How to implement this human-level automatic aligner? **

Jan Leike: It can be roughly divided into two parts. First, we need a system that is smart enough to perform tasks; on the other hand, we need to align this system to ensure that it can actually perform tasks. These two parts are not completely independent, they are closely related.

I wasn't personally involved in the first part of the research, but I believe it will definitely come true and a lot of people are working to make it possible. There are a lot of different directions for it to go, but you can imagine that as the model gets bigger and bigger, eventually it becomes smart enough.

**What I'm really interested in personally is the second part. The problem we face in the research is: With this very smart pre-trained model, how to make it do the alignment research in the way you want? Or more importantly, and more critically, how do you know if you can trust it enough to carry out the task? **

Our models may try to present results and techniques in a way that, if you decide to implement them, actually enhances the system itself or other related systems and exhibits more power-seeking behavior in the future, or jailbreaks (self-exfiltrate) and other behaviors.

Therefore, we need to align the AI to ensure that it can perform tasks according to our expectations and make full use of its capabilities to achieve the best performance on the task. To do this, a series of alignment training methods need to be developed to ensure that the AI remains aligned while performing tasks.

These are actually two different types of systems. We need to distinguish clearly whether the system is truly aligned or falsely aligned. The former is a system that can work hard to conduct alignment research and truly help us and seek the truth; the latter may have the intention to control the world, jailbreak, or perform other Risk of bad behavior. Therefore, we need to establish mechanisms or methods to distinguish between these two alignments to ensure that we can trust and rely on a system that is truly aligned.

**An important part of our work is to figure out how to distinguish true and false alignments. I call them verification methods. They are not directly used to train AI systems, nor are they directly used as a basis for selecting systems, but provide an independent The signal of is used to judge whether the system belongs to the real aligned system or the false aligned system. **

For example, interpretability research is one such method. If we look inside the model and find some evidence that the model is secretly plotting against us, then the model will fail the validation test and be deemed a falsely aligned system.

Another approach is to generalize the problem from easy to hard, which may be a training or validation technique, depending on how it is set up. This question examines whether the model's ability to generalize from simple problems that we can supervise to difficult problems that we cannot supervise. If the model can be supervised on the easy part and cross-validated on the hard part, then we can determine whether the model is at risk of covert cheating or other behavior.

Approach 1: Scalable Supervision

**Daniel Filan: How is scalable supervision achieved? At present, there is not much consensus on alignment. How to obtain training signals for high-quality alignment research? **

Jan Leike: The lack of consensus really shows once again that alignment is actually difficult to solve. This field is not yet very mature, so we have not gained so much experience summary so far. But I think alignment research has some very important properties that we can exploit for scalable supervision.

Assessing the quality of alignment research may be a better approach than simply studying alignment. This does not mean that research on alignment is easy, and it does not mean that assessing it is easy, but it is much easier to find a paper. For example, this paper has a cool idea, does some cool experiments, and the results are very good. After reading it, you will definitely feel the quality of this related research, which is much easier than completing the work. .

**Thus, the principle "evaluation is easier than generation" is at the heart of many scalable supervision ideas. **For example, if you consider recursive reward modeling, the basic idea is to use an AI assistant to help you evaluate the work of other AI systems: first let the auxiliary AI system align on a relatively simple task, which is used as an evaluation assistant to assist in the evaluation of other AI systems.

Since evaluation is easier than generation, the task of assistive AI systems is relatively simple, especially since humans collaborate with assistive AI systems in the evaluation. Once successful at this task, a combination of humans and auxiliary AI systems can be used to supervise the training of a new AI system on more difficult tasks.

By repeatedly repeating this process, we can continuously expand the range of tasks for which we can effectively supervise AI systems. This approach allows us to take advantage of the relative simplicity of the evaluation task to guide and train the AI system, gradually unlocking a wider range of tasks.

Scalable agent alignment via reward modeling: a research direction:

Jan Leike published a study on recursive reward modeling in 2018, designing appropriate reward functions for applying reinforcement learning algorithms to real-life problems. Additionally, the issue of agent alignment, i.e., how to create agents whose behavior matches the user's intent, is discussed. The team outlines a high-level research direction to address the problem of agent alignment centered on reward modeling, learning reward functions from interactions with users.

**Daniel Filan: That is, by iteratively adding more and more AI knowledge to the evaluation part of the alignment study. By operating in this iterative fashion, the AI system is always given a good training signal. **

Jan Leike: Yes. For example, RLHF is the simplest one and does not require the use of any assistant. Humans will evaluate whether the AI performance is good or not after seeing the results. This is a training signal.

Deep reinforcement learning from human preferences:

A 2017 study by Paul Christiano and Jan Leike. In this work, objectives defined in terms of (non-expert) human preferences between trajectory segments are explored to enable complex reinforcement learning (RL) systems to efficiently interact with real-world environments. Studies have shown that this approach can effectively solve complex reinforcement learning tasks without access to reward functions, including Atari games and simulated robot motion, while providing feedback for less than 1% of the agent's interactions with the environment. This greatly reduces the cost of human oversight.

Next, developing further from the previously described approach, we basically train the simplest assistant model, the critique model. This is an independent language model that observes the output of the first AI system and writes criticisms.

For example, the first AI system wrote a piece of code, and then let’s look at this code: Humans tend to be poor at finding bugs in code, which is why there is so much buggy code in the world. But now if there was a criticism system that could write criticism and point out errors, it would be easy for humans to judge: "This is definitely a bug, we should fix it".

The caveat here is that the task itself is not very clear-cut, since typically the code is written according to some natural language specification. In practice, the meaning of this specification is somewhat unclear, and determining whether an issue is a bug can be ambiguous. But more importantly, by using a critical model as an assistant, you can expand the scope of supervisory tasks. Although there may be some ambiguity and ambiguity about the certainty of problems and bugs in your code, you can still use the output of the critique model to find more problems. This approach allows you to effectively supervise AI systems across a wider range of task domains and expand the scope of supervision.

The very good thing is that there are actually many ways to evaluate the effectiveness of this approach through empirical research. One of the approaches, the one we used in the paper we published last year, is basically a randomized controlled trial with targeted perturbations. Suppose you have a coding task. Although we do not use coding tasks in the paper, the specific content of the task is not important here. Then, you have a response result, which doesn't have to be perfect, but let's assume it is. What you're trying to do is introduce some subtle error or flaw into this response result, making it a not-so-good, but imperceptibly bad response for humans.

The paper mentioned here is Self-critiquing models for assisting human uators published in 2022. This paper focuses on fine-tuning large language models and using behavioral cloning to write natural language reviews. Reviews written by the model can help people spot flaws in the results. In addition, the authors investigate the scale properties of reviews for topic-based summarization and synthesis tasks. Larger models will write more helpful reviews.

**Daniel Filan: How were these bugs introduced? **

Jan Leike: The easiest way is to let humans do it, but in the end we have to let the model complete the process by itself.

First, randomly sample one of the perturbations and then show it to a human, or a human assisted by AI, or any scalable supervised approach. And then see how likely they are to discover it? Or you can ask them directly: "Is there a vulnerability here?" and you can get the frequency with which they find bugs.

In addition, you can directly compare the model's knowledge of the problem and train the model to be a discriminator that can distinguish between good and defective versions. The accuracy of the discriminator represents its performance. Next, the model or the RLHF-improved model is asked to comment on the code. Then observe how often the model mentions code defects in comments. Compare the accuracy of the discriminator with the accuracy of the model for generating reviews. The discriminator accuracy reflects the model's ability to recognize the problem, while the review accuracy reflects the model's awareness of code defects. This is what we call the discriminator-critique gap.

If the difference is large, it means there are a lot of problems that the model can smell but it's not telling us because if we asked it to write a comment, it wouldn't say it. This situation is very concerning, because it means that your scalable oversight is deeply flawed in some way, and a lot of things are slipping under your radar. Or in a sense, this AI assistant is not very cooperative because it is not trying very hard to help you.

On the contrary, a small difference value means that the AI assistant will tell you once it knows that there is a problem with the model. In particular, if a similarly sized model or the same base model tries to implant bugs or place hard-to-find trojans, with small variance values, then we can rule out the possibility of such an attack with greater confidence. Because another copy of the model is likely to write a review that reveals potential problems.

This situation relies on the assumption that discriminator training can actually eliminate this situation from the model. I think we have good reason to believe this, since fine-tuning a discriminator essentially gives you access to all activations in the model and fine-tunes all the weights simultaneously. Therefore, if knowledge exists somewhere in the model's activations, fine-tuning the discriminator should be able to extract this knowledge, provided the fine-tuning is done correctly and there is enough data.

You can actually do some backward calculations to determine how much data you need. Suppose there is a single neuron in the model that finds problems with the code. To find and identify this neuron, the number of data points you need is roughly the logarithm of the total number of neurons. This means that relatively few data points are enough to narrow down and find neurons with a specific function. This estimate shows that with discriminator training and enough data, it is possible to discover the functions of specific neurons in the model.

**Daniel Filan: That's one question I have about the whole plan as well. The reason we don't like RLHF is that it may not be able to distinguish between what humans think is correct and what "sounds good", because humans are not good at criticism. **

The idea of recursive reviews is to continuously improve the quality of reviews by training discriminators and reviewers. One way is that we train something that can write really good comments, and then we work with it to train something that's even more capable of commenting. In this case, we gradually increased the requirements for review quality to achieve better results. But is there another situation where we train a thing, inadvertently train it to write fake comments that sound good to us, and then we work with it to further improve our ability to discern good-sounding comments. This can lead to us focusing more on things that just sound good but are actually problems, rather than paying attention to the problems that actually exist.

Jan Leike: Yes, I agree that this is a very important and legitimate question. When exploring applications of scalable supervision, it becomes crucial to understand one of its key issues. Therefore, I am very excited to actually apply these techniques and try them out empirically, because I believe that through practice we will be better able to evaluate the effectiveness of these methods.

A concrete and simple way to measure this effect is to deliberately train a model with deceptive alignments and then observe whether scalable supervision is able to identify and flag such deceptive behavior, or how difficult it is to detect... ...or how much effort we need to expend in order for a model to successfully deceptively align itself to evade our scalable supervision.

**Daniel Filan: Yes, I was thinking of a "sandwiching experiment" for testing AI answers to legal questions. In this experiment, some non-legal people were asked to pose legal questions, and then some people who were actually well-versed in the law were asked to check whether the AI was fooling the non-legal people. **

Jan Leike: These experiments are really interesting, and there has been some interesting research in this area. Some of the basic issues you point out do need to be considered.

First, you mentioned the issue of trust in experts. Because experts sometimes make mistakes, there may be some uncertainty in relying on expert judgment, which is indeed an important consideration. Additionally, it is critical to ensure there is sufficient domain overlap between experts and tasks so that they can provide accurate assessments and feedback.

Another problem is that for some tasks we may not know the underlying facts or truth. Even in a field like alignment research, we may have different perspectives and perceptions. This makes accurate assessment in these tasks more difficult. Furthermore, collecting large amounts of data can be an expensive task due to the limited number of experts and high time costs.

So in general, I'd like to have a method of assessment that doesn't rely on the assumption that we already have the ground truth. This approach can be evaluated without prior knowledge and can be applied to tasks of various difficulty levels, which is why I am critical of using these randomized controlled trials to perform targeted perturbations or measure discriminator-criticism gaps. Reasons to get excited about prospects.

**Daniel Filan: Yes, though, when measuring the discriminator-critic gap, you do need an actual discriminator, not just a discriminator that distinguishes "looks wrong" from "looks okay" device. **

**Jan Leike:**You are saying that you can introduce defects into artificial intelligence systems, right? To some extent, this method may be better than human evaluation because it is closer to the real distribution that artificial intelligence systems face in practical applications. By using this flawed data, the discriminator can be fine-tuned, and we can establish a ground truth if we believe that the flawed version is actually worse. We can observe why things go bad and verify them to understand them better.

**Daniel Filan: Although an artificial intelligence system may make us think something is good, it is not necessarily good; similarly, if an artificial intelligence system makes us think something is bad, then in fact It may be really bad, or the performance may be degraded. Anyway, if AI makes you think this is bad, maybe it's easier to help us check it out? **

Jan Leike: Yes, I see what you mean. In this case, I probably shouldn't use the term "ground truth" because it's not really ground truth, like nothing is really true, but there's a lot you can do to make You have a lot of confidence in the true value, and that doesn't necessarily make the task of finding problems any easier.

Ground truth:

In supervised learning, data labels are usually in the form (x, t), where x is the input data and t is the label. The correct t mark is ground truth, which can be understood as the reference standard and the true value in the sense of reference, while the wrong t mark is not.

Approach 2: Search for bad behavior and internal structure

**Daniel Filan: In OpenAI’s article introducing Superalignment, one of your alignment pipelines is to automatically search for model behaviors that may cause problems (robustness) and internal structures that may cause problems (automatic interpretability). At this point, what issues do you think the superalignment team will solve next? **

**Jan Leike: Interpretability without a doubt. In a sense, explainability is really hard. We don't have any major results on language models yet, and it can be said that interpretability does bring us a lot of inspiration or adds a lot of value, because our understanding of the model and the internal situation is still rudimentary. **

**Daniel Filan: The academic community has done some interpretable work on language models. For example, the work of ** In-context Learning and Induction Heads **, and the work of indirect object identification (Indirect Object Identification), can at least perform some type of indirect object identification. I want to know, besides these, what else do you need to reach your ideal end point? **

• In-context Learning and Induction Heads

Published in 2022, this work focuses on relevant security issues in the context of the continuous expansion of the Transformer generation model, and improves mechanical interpretability by reverse engineering the detailed calculations performed by the model. By understanding the internal structure that causes a Transformer model to produce its output, address current security issues more systematically, and predict security issues in future, more powerful models.

• Interpretability in the Wild: a Circuit for Indirect Object Identification in GPT-2 small

This paper demonstrates that mechanistic understanding of large machine learning models is feasible by explaining how GPT-2 small performs a natural language task called indirect object recognition (IOI) to bridge the gap in mechanistic interpretability performance in complex large models , which provides the opportunity for interpretability to extend to larger models and more complex tasks.

**Jan Leike:**Yes, the current exploration in the field of interpretability is very gratifying. I think more importantly, if we can use interpretability technology on a language model reward model, such as GPT-4 size Or any big model you can think of, and then got something about the reward model that we didn't know before, which is important because the reward model provides the training signal for a lot of RLHF training, so it's important to understand it better It is very valuable, and being able to flag or discover problems in the behavior it motivates that we humans do not want to appear will be a very important advancement. **

In this sense, I think interpretability is neither necessary nor sufficient. I think it's quite possible that we can solve the alignment problem purely behaviorally, without really understanding the internal model. But I also think that any non-trivial insight that we get from interpretability is going to be super useful, or could be super useful, because it gives us a way to attack. **

So it is completely impossible for us to give up the attempt at explainability. Because in a way, you have this artificial brain, and we have perfect brain scanners, we can completely zoom in and precisely measure the activation of every neuron on every forward pathway, including arbitrary, discrete timestamp, which is probably the maximum resolution we want to get. We can also make arbitrary interventions and perturb any value in the model at will. This gives us a lot of space and opportunity to experiment, and we'd be crazy not to take advantage of that.

But at the same time, the reason it's very difficult is because the model is learning how to compute in terms of efficiency, rather than being regularized to something human-understandable, or there's no reason to believe that a single neuron should correspond to a concept, or anything close to human think they are or should be or are familiar to us. In fact, empirically, neural networks represent many different concepts with a single neuron, and each concept is distributed among different neurons. So neurons are not important here.

There are two things I would focus on in terms of interpretability.

The first is causality. We want to look at neurons as we pass data through the model, for example we have a neuron related to "Canada" that fires when a concept related to Canada comes up. But this is only correlation, not necessarily causation. To verify that this is a causal relationship, we would then have to intentionally write about Canada-related concepts to see if they all respond, while also writing about other related concepts that might sound Canadian-related, or Nothing to do with Canada, but generally very similar, and then checking to see if the neurons react, or see if those neurons shut down, and so on.

*Daniel Filan: This is the same as Tolga Bolukbasi et al's ** An Interpretability Illusion for BERT **This paper, I think it is called Interpretability Illusion (Interpretability Illusion), the article mentions, We can make neurons respond to one specific thing, but it's just an illusion because on other datasets those neurons respond to a bunch of other things. **

An Interpretability Illusion for BERT:

The paper describes the "illusion of interpretability" that occurs when analyzing BERT models. The activations of individual neurons in a network may appear to encode a single, simple concept, when in fact they encode something much more complex, and the same effect applies to linear combinations of activations. The authors trace the source of this illusion to the geometric properties of BERT’s embedding space and the fact that ordinary text corpora represent only a small fraction of possible English sentences.

**Jan Leike:Another exciting thing is that OpenAI published an interpretable paper Language models can explain neurons in language models earlier this year ( Picking Note: ** in In this paper, the experimenters try to use GPT-4 to explain the behavior of GPT-2 neoron) What we want is a technique that can work at the level of detail of individual neurons, so that you can really ensure that you will not miss any detail while also being able to work at the scale of the entire model.

Because at the end of the day, everything in the model is related, so both are important. So far, technology has mostly been an alternative. There have been attempts at automatic interpretability before our paper, so we are not the first to do so. But I think if there can be some really detail-oriented interpretability work, some mechanistic interpretability methods that really try to understand the individual circuits or computational units inside the model, then the way to extend that to the entire model is to automate it, right?

But you can do this too: once you figure out how to do it in detail, well, you just document what you're doing, i.e. let an automatic alignment or an interpretability researcher detail to study what is happening with the model. Then, sift through the whole thing, or find a way to aggregate it. **I'm oversimplifying a bit here, but anyway, this is the idea that I'm very excited about.

So, in the paper, we have a lot of explanatory content. For example, this paper writes a natural language interpretation for a single neuron, which may not be entirely correct, but it gives you a simple example of what we can do here. The way it works is that you simply show GPT-4 a series of activation patterns, and then ask GPT-4 to write a suggested explanation.

Generally speaking, these explanations aren't very good, also because the task is so difficult and most neurons don't do things that humans can clearly understand. But we can run this program at the scale of each neuron in GPT-2 and throw away all explanations and try to figure out what the interesting patterns are. You can also look at scaling trends, like, "How do we automatically score these explanations as the model gets larger?" Or, "What if we add more computation, or make the model that does the explanations get bigger?" What happens to the quality of explanations? "

The cool thing is that we can automatically measure this metric using language models. While it's not a perfect measure and has a lot of problems, it can give you a proxy indicator of whether a human would think this explanation was good or not. You can then use this proxy at scale, running it on a large number of neurons.

**Daniel Filan: If you think about the necessary interpretability work, how much of it do you think is about figuring out a better basic unit of explanation versus figuring out how to extend what's going on? **

Jan Leike: I think you need both, the former is more difficult, which makes sense of course, and to be successful, I think you need to scale up.

Approach 3: Adversarial Testing

**Daniel Filan: The third path to achieve Superalignment is to deliberately train misaligned models and see if the pipeline can detect these models. So is OpenAI going to weed them out, or is it proactively fixing them? **

Jan Leike: The goal here is not to fix these deliberately trained misaligned models, in fact these wrong models are only used for detection.

Fundamentally, the core goal is that we need to be able to effectively distinguish between true alignment models that can help achieve our goals and make progress in alignment research, and false alignment models that have the potential to take over and infiltrate themselves. One way to help us better differentiate between these two types of aligners is to make a fake aligner and see if it resembles the real aligner. But you have to be very careful doing this because you're creating horrible stuff we all want to avoid.

**Daniel Filan: At the beginning of the OpenAI article, you mentioned that Superintelligence may be a technology that has the most profound impact on mankind and will help us solve the most important problems in the world. **

There is a saying about how great superintelligence is, and I wonder if it will lead to the loss of human power or even the extinction of humankind? Specifically, what kind of dislocation model are we talking about? How do we know they won't do those terrible things?

Jan Leike: A lot needs to be done to make the future development smooth. We need to have the right governance structures in place so that we can prevent the misuse of powerful AI systems, and we need to have precautions in place so that we avoid some sort of runaway effect that would still be detrimental to humanity. In this case, you have AI that is allied with humans and you have AI that is allied with anti-human corporations, and even if all the economic indicators or whatever looks good, the whole system can spiral out of control.

**Additionally, we also need to address technology alignment issues so that we can truly align our AI systems. **Superintelligence alignment focuses on only the last part of the risk. The technical problem we want to solve is how to make an AI system consistent with a set of human values, while at the same time deriving a new independent question, what should these values be? How do we design a process to import these values from society?

I think these are important questions that we need to answer. Only if we solve these problems can the future develop smoothly. I think the criterion for a good future is not just "AI doesn't kill everyone" but seeing humanity flourish and the future I aspire to is the future everyone wants to live in. The more powerful the AI, the greater the risks, and the greater the potential for errors if the system is misaligned.

In particular, from a dislocation perspective, I think the threat patterns we should be especially worried about are systemic lies, deceit, and self-infiltration.

**Daniel Filan: You talked about deliberately training misaligned models. What you mean here is training them to lie and sometimes try to jailbreak, but because the actual ability level of the model is low enough, is this enough to prevent and control risks? **

**Jan Leike: **Actually, you wouldn't want to train a model to be able to jailbreak, what if it actually does a good job at that? It would be safer to choose some other, more general proxy task, which will still help you make evaluating the model easier.

And then the core difficulty and the core goal is to really figure out what properties a system needs to have to pass the assessment. If you have a system that occasionally lies to you or deceives you, this can be seen as ineffective. Only when it does a continuous deception, a continuous lie, and convinces you, is it a truly coherent and credible liar. The way it lies is so consistent that you can hardly point out its lies or any inconsistencies in what it says through interrogation, which is truly terrifying.

In fact, it's not easy to be such a consistent liar. We try to make it difficult for models to become such consistent liars through scalable supervision, interpretability, and generality.

But it's not enough if we do that, and it's hard to determine how easily an AI can cheat consistently. One way to be sure is to first train the model to become a truly stable liar, and start from stability to see how difficult it is to achieve a coherent liar. How much do we need to trick the model? To what extent do we need to give the model hints, or teach it hands-on along the way? Having said that, you don't want to train a system that is truly dangerous, and if you actually run it and it successfully filters itself and instead directly causes the very thing you want to prevent, that would be really bad.

**Daniel Filan: When you say that, are you distinguishing between lying and other types of dangers, like, we can train it to lie, but we won't train it to murder? **

Jan Leike: Yes. For example, let's say you set a false goal for it. Maybe it's to convince humans to say a certain mantra, maybe it's to run a certain Python command, but in fact, this command doesn't do anything real, it just looks funny.

Also, we are concerned with how to do this a priori, and system stress testing is another matter entirely.

In the context of interpretability, our goal is simply to understand whether the currently trained system or existing systems are somehow close to our desired target system and can fool us in a coherent way. We want to determine whether the system will run a certain code or similar behavior without human supervision, which requires us to conduct experiments for specific scenarios. All we need is to verify "this is a coherent liar", otherwise it's really hard to determine a priori how close we are to this goal, except when we deliberately push forward, so that the system can do other outrageous things .

**Daniel Filan: So you train it to do some arbitrary little thing instead of the bad thing it's really supposed to do, like it has to lie, harm people, etc., but ultimately, it just puts a sticker on someone's forehead A note or something. **

Jan Leike: Exactly, like you hire someone to do a penetration test (Penetration_test), and all I have to do is go into the building and shake your hand, and then you say, "Yeah , it looks like you succeeded," or something like that. Or you say, "Can you steal this fake thing for me? I want to find out how secure we are." You can do that with no real consequences, but it still tells you a lot about security. information. I'm excited to do the same thing with alignment, stress testing your alignment system by training something specifically targeted to break and circumvent it, which is both very benign.

03.Superalignment Timetable

**Daniel Filan: OpenAI's goal is to solve the core technical challenges of Superalignment alignment within a 4-year period. What are the core technical challenges here? **

**Jan Leike: **This refers to how to make Superalignment consistent with human values. What we envision with Superalignment is a system that is much smarter than humans, can perform potentially much faster, and can work with many copies of itself, so it's a really powerful system.

We hope to achieve this within four years. The reason why I chose four years is that one is really ambitious, and the other is to make people believe that we can actually achieve this goal. At the same time, even if AI progresses very fast and the technology improves a lot in the next few years, we can still have something to do under this ambitious goal.

**A near-human-level automatic aligner is an instrumental goal we are pursuing, with the ultimate goal of figuring out how to align superintelligent agents, because we don't yet know how to do that. **

**Daniel Filan: To what extent do you think it can be achieved in 2 years? **

**Jan Leike:**If we push back from four years, I think generally we will be able to basically complete automatic alignment research in about three years, provided that some basic capabilities are already in place. If not, our project may take longer.

If it is within two years, we hope to have good control over this goal. Including what technologies are actually used, do we have such a combination of technologies, and whether we will have the confidence to have a trustworthy system that can not only be used frequently, but also be able to delegate a lot of work to it. At this point we'll want to break the problem down enough that it feels like the overwhelming workload right now is just engineering, in the sense that we're probably still two years away from solving the research problems associated with it .

Now that we have a timeline for a four-year goal, it is clear that advancements in AI capabilities are tied to that deadline. If progress slows down, we may not have a model that is really useful for alignment research tasks. But if in four years we find that the model is still not good enough, that also means we have more time to actually solve the problem because the problem is not as urgent.

On the other hand, artificial intelligence may advance faster, and humans may welcome the arrival of superintelligence faster. At that point, we have to adjust our plans accordingly. Therefore, we chose four years as a time frame that was both realistic and gave us enough urgency to solve problems quickly.

**Daniel Filan: Assuming that in terms of AI capability research, the progress is about the same as expected. Four years later, you guys have all the capabilities to be a good auto-alignment researcher, but interpretability is harder than we thought, or scalable supervision is harder than we thought, so you haven't achieved superalignment yet what to do ? **

Jan Leike: First of all we have to tell the public that we have not achieved the goal, but we will be responsible for this goal. What happens next after the target fails depends on the general state of the world at the time. Can we somehow buy ourselves more time, or is our general thinking wrong, should we switch directions, etc.? Many things can happen.

But in fact, in my opinion, alignment is actually very easy to solve. There are many good ideas that just need to be rigorously tried and measured, in which the model can really learn something and improve a lot. Over the past two years I have become more optimistic and I think this is a very realistic goal. Even if I'm wrong, even if the problem is much harder than we think, it's still very useful to try. There is now a lot of disagreement about how difficult this problem is, but more importantly, how consistent a system is measured in practice.

**One of the things that worries me the most is not that our systems are not unified enough, but that we actually don't really know how unified they are. **Experts may have their own opinions in this situation, and it is very easy and scary if everyone agrees that the system is not coordinated enough that the model cannot be deployed. In addition, we also need to face enormous commercial pressure.

People are paying close attention to the deployment time, but experts can only postpone it indefinitely without finding a definite reason. This situation is really worrying. The business pressure will only increase. On the one hand, you are very confident, but you are not sure. I would very much like to avoid that, and the immediate way to avoid that is for us to get really good at measuring how well the systems actually fit together, and that's where the broader technology portfolio really helps.

Daniel Filan: In Governance of superintelligence, Planning for AGI and beyond **In these articles, OpenAI mentioned the independent audit (audit) for AI systems to ensure the implementation of AI Safety. As expected, to what extent can the Superalignment Team research something useful for model auditing? **

**Jan Leike: **If it goes well, the technology we have developed can be used in "model auditing". For example, if we can make some progress on interpretability, then any technique we come up with could be used by reviewers as part of their review work; or, some kind of scalable supervision as part of the review would be possible. But the Superalignment Team is actually not suitable for auditing because we are not independent from OpenAI. In my opinion, the audit must be completely independent of the auditee, which is why I pay close attention to the matter of "independent auditors".

The core task of our team is not to convince ourselves that the system we are building is correct and safe, because it is very simple to convince ourselves of various things. What we have to do is to convince the entire academic community or groups that focus on AI Safety to believe in the model. is safe. This requires not only researching the technology we are going to use and showing it to others after providing evidence that the system is what we think it is, but also an independent assessment of all of the above.

04.Generalization

**Daniel Filan: In the footnotes of the Introducing Superalignment article, you mentioned: The favorable assumptions people have made so far may be broken. One assumption is that generalization is benign. How do you view the issue of generalization? **

Jan Leike: We recently established a generalization team headed by Collin Burns.

**The question we face is: how to understand and improve the generalization ability of the model? How to make the model generalize from simple tasks that can be supervised to tasks that are difficult to supervise? This problem is actually complementary to scalable supervision. In Scalable Supervision, we focus on enhancing the ability of humans to evaluate what the system is doing. If we think about recursive reward modeling, the question is “Can we use a recursively evaluated AI assistant to recursively evaluate everything the AI does?”. **

What I really like about it is that it really puts the human in the loop, front and center, and observing everything that the AI system is doing. Of course, in practice, you can't really do this because the AI system does a lot, but you can observe it all through small independent probabilities. But in this way, we still don’t know whether the model generalizes to situations we are not paying attention to.

So the way I've thought about this in the past is generally, you just make sure your model is mostly iid generalization, i.e. the task we're working on has the same distribution as the task we're not working on.

Independent and identically distributed generalization:

The generalization ability of the model is the performance of the model in the test set (in which the data model has not been seen before), that is, the model's ability to draw inferences from one example. Independent and identically distributed generalization means that these numbers must satisfy iid (independent and identically distributed) and be in the same distribution.

**Daniel Filan: You mentioned in one of your personal blogs that you don't intend to rely on generalization at all, just continue to train and continue to do iid. **

Jan Leike: Yeah, at least my initial thought was that I didn't want to rely on non-IID generalization because that doesn't work well in neural networks and it's not well understood.

But the new question is: "What if we actually understand it? What if we can actually say what the generalization means?" I think that's a very good question. Ilya also mentions this often. So, what we want to know is, can we still find the meaning of model generalization for things that are not supervised, even if they are not iid? Does it generalize in a way that humans intend? So, we can now investigate this question empirically through well-designed experiments.

We have been studying how to split existing data sets into easy and hard problems, where easy problems are defined as problems that small models can solve. We then try to understand or improve the accuracy of large models on the entire dataset. This is a very interesting topic as it provides a completely new backbone for training and validation techniques. For example, if the model works so well that we can supervise the reward model on some easy and confident evaluation tasks, or make the model generalize to more difficult problems, then we can complete the model generalization.

And then there's this reward model that, even without supervision, generalizes to harder tasks in the way we want, and can be trained with it. But we still have no way of knowing if it's actually aligned now. However, you can leverage scalable supervision and interpretability to validate these techniques. Or the other way around, suppose we train our auto-aligner with scalable supervision, and then use generalization as a validation technique, i.e. we generalize to the probability of the true answer based on the best knowledge of the model.

We then ask, is there a small flaw here? Is there a Trojan written in the scalable supervised alignment model in this code? Now we can do advanced cross-validation. We can train two different models: one trained with generalization techniques and the other with scalable supervision techniques, and now we can have them cross-validate each other's answers and check that these models are fundamentally the same? If different, what are their important differences?

**Daniel Filan: By "training through generalization techniques" here, does it mean training on simple problems and then generalizing to difficult problems, or something else? **

Jan Leike: If you understand how your model generalizes from easy to hard, you can make it generalize very well, and its accuracy will be basically the same as if you trained it on the hard problem Just as good. Now, you can use this as a reward model, or as "Which answer would I prefer if I actually knew what was going on here?"

**Daniel Filan: Regarding non-IID generalization, why do we need to know the internal structure of the model? Is it because you want to know what it will do if you haven't checked? What is the connection between these two questions? **

Jan Leike: To some extent, the questions they want to answer overlap: what does the model do in the absence of a distribution? At least they have two distinct paths to answer.

To perform cross-validation, the training set must be split differently. What I mean by cross-validation here is that in one training run, you train using a generalization method and then validate using interpretability, scalable supervision, and other techniques. Then in the second training, it is trained using scalable supervised methods and validated using generalization methods, interpretability and other methods. This way, you get two independent attempts at the problem.

**Daniel Filan: Yes, I mean cross-validation in a very broad sense of "things validating each other in a cross-wise manner." **

Jan Leike: I think the best case scenario is that they are actually complementary rather than doing the same thing. If you can understand or improve how a model generalizes, then you have ways to leverage the model's internal structure to best accomplish what you want to do. Let's say you're trying to extract the model's best perception of what the world is really like, which is very hard for RLHF because people prioritize things that sound real, so RLHF reinforces what humans think is real . So you're actually training the model to tell you what you want to hear or what you believe, but that may not be what the model knows. But generalization techniques give you a way to extract these, although we haven't really proven what is optimal for a model to know.

However, if you have really good interpretability tools, you can hopefully do something like that, try to figure out the cognitive, internal structure or whatever of the model from the internal structure. But fundamentally, it can be harder because you never know if this is the best cognition the model can produce, or the cognition of someone the model is simulating. There is an assumption that a pre-trained language model is just a collection of different characters, and you might extract the cognition of a character or a group of characters.

**Daniel Filan: Then you need some kind of causal model from so-called cognition to output. **

Jan Leike: That’s right. I think this kind of application is actually quite natural in terms of interpretability. Like a lie detector, or uncovering evidence of deception in a model, a secret conspiracy to overthrow humanity, interpretability research can lead to patterns of “knowledge extraction.” Knowledge extraction that generalizes in the same way is much more difficult.

**Daniel Filan: For generalization, you have to choose the generalization distribution. And our hope is that maybe interpretability can tell you something, like, does it have or doesn't have a lying kernel, and even if it does, it's only unraveled here. **

Jan Leike: Right. This is also a very interesting machine learning problem: how do neural networks generalize outside the i.i.d. setting? In what ways do they generalize naturally, and where do they not? For example, in the InstructGPT paper, one of the things we found was that even though our fine-tuning dataset was almost entirely in English, the model was also very good at following instructions in languages other than English. But sometimes it does something weird: ask it to use another language, say, to write an abstract in German, and it writes it in English. In general, the model understands perfectly which language it speaks, but that doesn't necessarily mean it has to follow German instructions. Fundamentally, it generalizes instructions across languages.

But we don't know why it works that way. This has happened many times. There are also intuitive reasons for this. Humans generalize across languages, but I wanted to know the mechanism of generalization within the model, or generalization to following instructions and code.