More

- Topic1/3

15k Popularity

8k Popularity

16k Popularity

7k Popularity

2k Popularity

- Pin

- 🎊 ETH Deposit & Trading Carnival Kicks Off!

Join the Trading Volume & Net Deposit Leaderboards to win from a 20 ETH prize pool

🚀 Climb the ranks and claim your ETH reward: https://www.gate.com/campaigns/site/200

💥 Tiered Prize Pool – Higher total volume unlocks bigger rewards

Learn more: https://www.gate.com/announcements/article/46166

- 📢 ETH Heading for $4800? Have Your Say! Show Off on Gate Square & Win 0.1 ETH!

The next bull market prophet could be you! Want your insights to hit the Square trending list and earn ETH rewards? Now’s your chance!

💰 0.1 ETH to be shared between 5 top Square posts + 5 top X (Twitter) posts by views!

🎮 How to Join – Zero Barriers, ETH Up for Grabs!

1.Join the Hot Topic Debate!

Post in Gate Square or under ETH chart with #ETH Hits 4800# and #ETH# . Share your thoughts on:

Can ETH break $4800?

Why are you bullish on ETH?

What's your ETH holding strategy?

Will ETH lead the next bull run?

Or any o

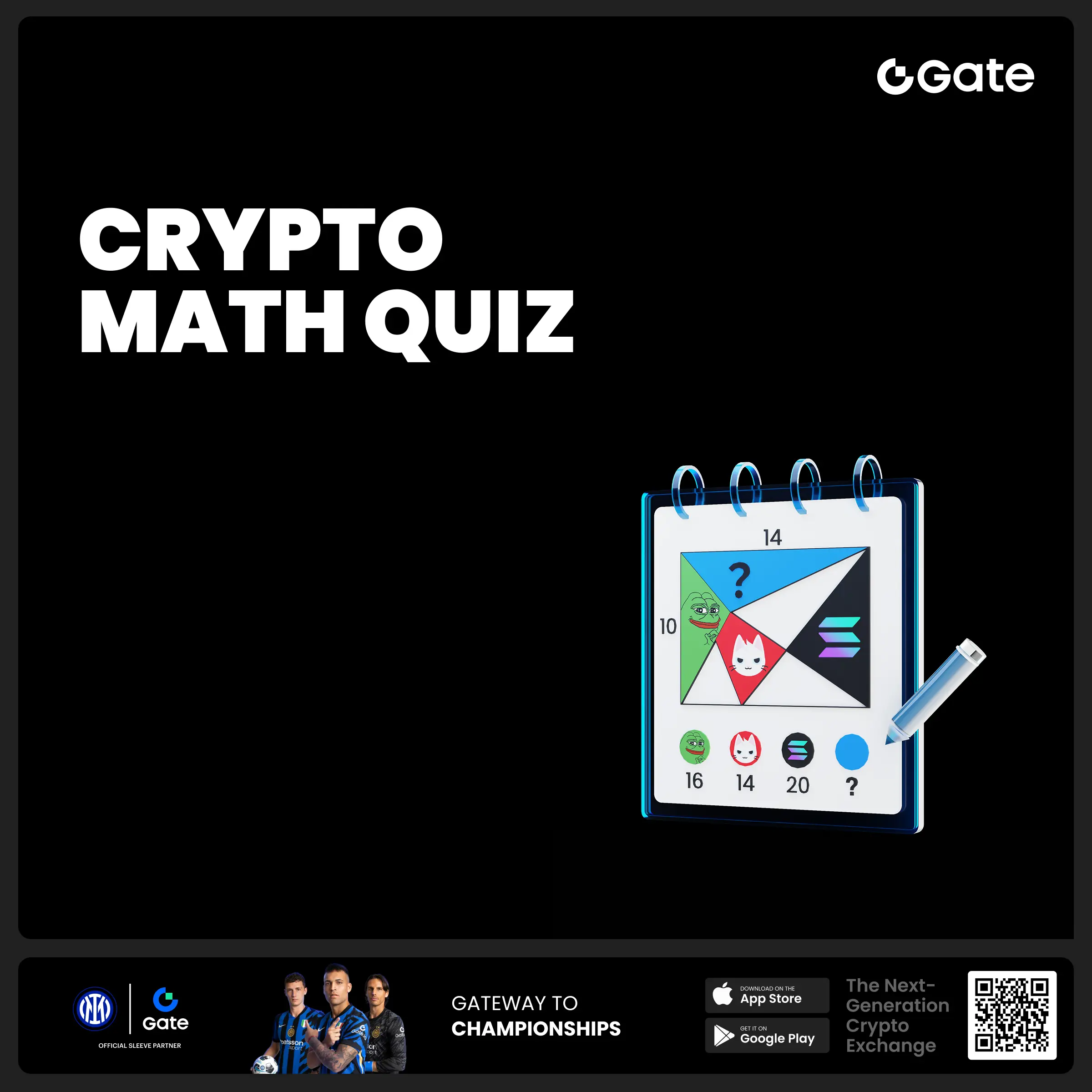

- 🧠 #GateGiveaway# - Crypto Math Challenge!

💰 $10 Futures Voucher * 4 winners

To join:

1️⃣ Follow Gate_Square

2️⃣ Like this post

3️⃣ Drop your answer in the comments

📅 Ends at 4:00 AM July 22 (UTC)

- 🎉 [Gate 30 Million Milestone] Share Your Gate Moment & Win Exclusive Gifts!

Gate has surpassed 30M users worldwide — not just a number, but a journey we've built together.

Remember the thrill of opening your first account, or the Gate merch that’s been part of your daily life?

📸 Join the #MyGateMoment# campaign!

Share your story on Gate Square, and embrace the next 30 million together!

✅ How to Participate:

1️⃣ Post a photo or video with Gate elements

2️⃣ Add #MyGateMoment# and share your story, wishes, or thoughts

3️⃣ Share your post on Twitter (X) — top 10 views will get extra rewards!

👉

The large-scale domestic voice dialogue model is here: Li Kaifu participates in all things, bilingual and multi-modal in Chinese and English, open source and commercially available

Source: Qubit

The first Chinese-English bilingual voice dialogue open source model is here!

In the past few days, a paper on speech-text multimodal large-scale models appeared on arXiv, and the name of 01.ai, a large-scale model company owned by Kai-Fu Lee, appeared in the signed company.

Supports text and voice input, can also be played on mobile phones

According to the researchers, LLaSM is the first open source and commercially available dialogue model that supports Chinese and English bilingual speech-text multi-modal dialogue.

So, let’s take a look at its voice text input and Chinese and English bilingual capabilities.

First of all, let’s have a Chinese-English cultural collision, let him comment on Li Bai in English:

It can be seen that the model gave a very neutral evaluation after thinking for a while, and also has the basic "common sense of handling water" of the large model (manual dog head)

Let's try typing "Suggest me a recipe" with voice:

It can be seen that the model accurately outputs a recipe of "Eggplant Cheese", but I don't know if it is good or not.

However, when we tried it, we also found that this model sometimes had bugs.

For example, sometimes it doesn't "understand human speech" very well.

When asked to output mixed Chinese and English content, it will pretend not to understand and output English:

But separately, its ability to express both Chinese and English is pretty good.

So, how is such a model realized?

**What new model did you make? **

Judging from the trial play, LLaSM has two main features: One supports Chinese and English input, and the other supports dual voice and text input.

To achieve these two points, some adjustments need to be made in the architecture and training data respectively.

Architecturally, LLaSM integrates the current speech recognition model and the large language model.

LLaSM consists of three parts, including the automatic speech recognition model Whisper, the modal adapter and the large model LLaMA.

Among them, Whisper is responsible for receiving the original speech input and outputting the vector representation of speech features; the modality adapter is responsible for aligning speech and text embedding; LLaMA is responsible for understanding speech and text input instructions and generating responses.

On the training data, the researchers compiled a data set LLaSM-Audio-Instructions containing 199,000 dialogues and 508,000 speech-text samples.

Among the 508,000 speech-text samples, there are 80,000 Chinese speech samples and 428,000 English speech samples.

Researchers mainly use text-to-speech technology to generate voice packets for these data sets based on data sets such as WizardLM, ShareGPT and GPT-4-LLM, while filtering out invalid conversations.

However, the paper does not yet compare its output effects with other speech models or text models.

about the author

This paper is from LinkSoul.AI, Peking University and 01Wanwu.

The co-authors Yu Shu and Siwei Dong are both from LinkSoul.AI, and previously worked at Beijing Zhiyuan Artificial Intelligence Research Institute.

LinkSoul.AI is an AI start-up company that has previously launched the first open source Llama 2 large Chinese language model.

Demo site: