- Topic1/3

886 Popularity

23k Popularity

5k Popularity

4k Popularity

170k Popularity

- Pin

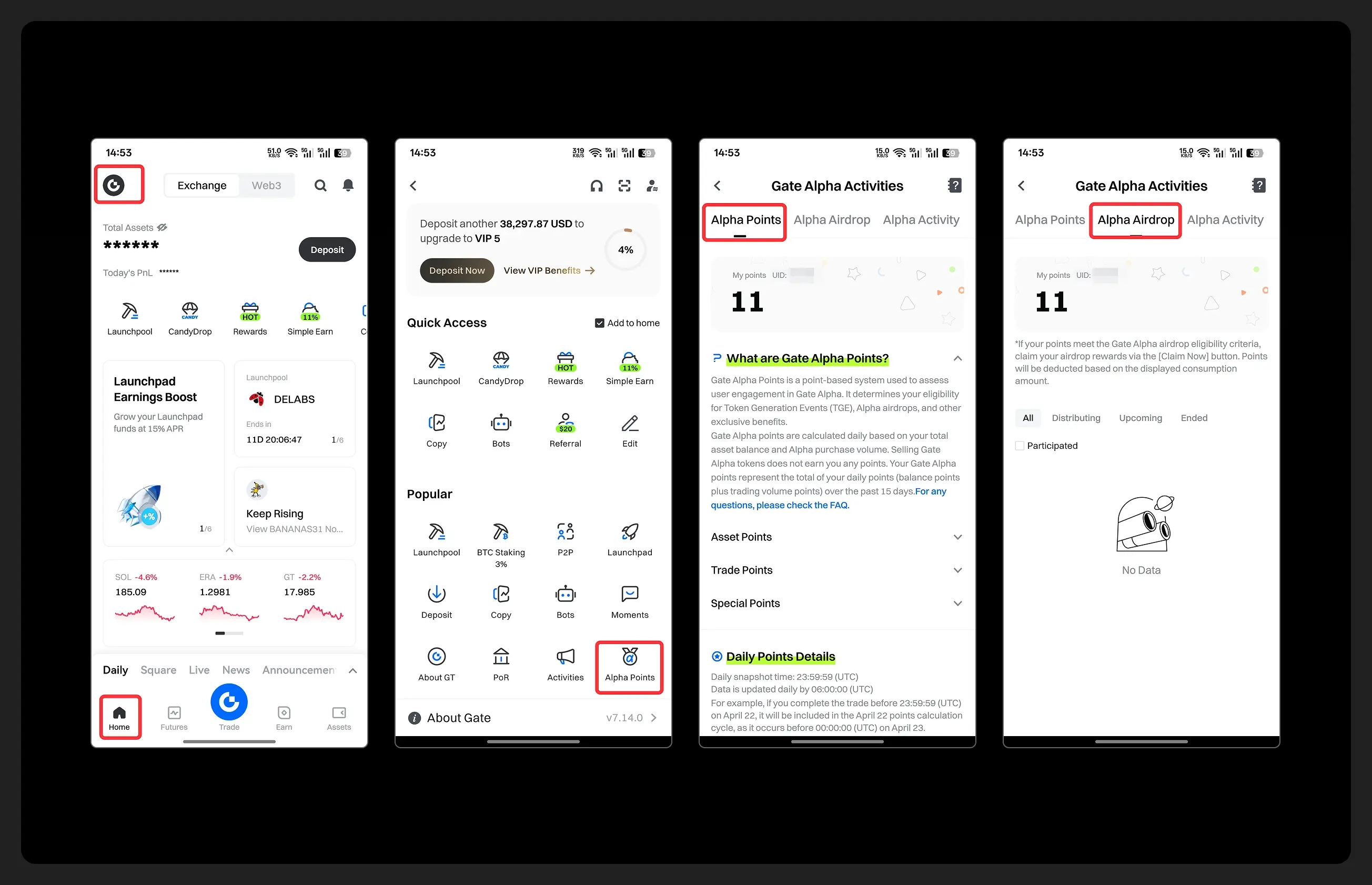

- Hey fam—did you join yesterday’s [Show Your Alpha Points] event? Still not sure how to post your screenshot? No worries, here’s a super easy guide to help you win your share of the $200 mystery box prize!

📸 posting guide:

1️⃣ Open app and tap your [Avatar] on the homepage

2️⃣ Go to [Alpha Points] in the sidebar

3️⃣ You’ll see your latest points and airdrop status on this page!

👇 Step-by-step images attached—save it for later so you can post anytime!

🎁 Post your screenshot now with #ShowMyAlphaPoints# for a chance to win a share of $200 in prizes!

⚡ Airdrop reminder: Gate Alpha ES airdrop is

- Gate Futures Trading Incentive Program is Live! Zero Barries to Share 50,000 ERA

Start trading and earn rewards — the more you trade, the more you earn!

New users enjoy a 20% bonus!

Join now:https://www.gate.com/campaigns/1692?pid=X&ch=NGhnNGTf

Event details: https://www.gate.com/announcements/article/46429

- Hey Square fam! How many Alpha points have you racked up lately?

Did you get your airdrop? We’ve also got extra perks for you on Gate Square!

🎁 Show off your Alpha points gains, and you’ll get a shot at a $200U Mystery Box reward!

🥇 1 user with the highest points screenshot → $100U Mystery Box

✨ Top 5 sharers with quality posts → $20U Mystery Box each

📍【How to Join】

1️⃣ Make a post with the hashtag #ShowMyAlphaPoints#

2️⃣ Share a screenshot of your Alpha points, plus a one-liner: “I earned ____ with Gate Alpha. So worth it!”

👉 Bonus: Share your tips for earning points, redemption experienc

- 🎉 The #CandyDrop Futures Challenge is live — join now to share a 6 BTC prize pool!

📢 Post your futures trading experience on Gate Square with the event hashtag — $25 × 20 rewards are waiting!

🎁 $500 in futures trial vouchers up for grabs — 20 standout posts will win!

📅 Event Period: August 1, 2025, 15:00 – August 15, 2025, 19:00 (UTC+8)

👉 Event Link: https://www.gate.com/candy-drop/detail/BTC-98

Dare to trade. Dare to win.

Marcus reviews GPT-5! A new paradigm is urgently needed, and OpenAI has no advantage

Original source: New Zhiyuan

The news about GPT-5 has recently gone viral again.

First came the revelation that OpenAI was secretly training GPT-5, followed by Sam Altman's clarification. Later came the question of how many H100 GPUs would be needed to train GPT-5, and then DeepMind co-founder Suleyman said in an interview that OpenAI was secretly training GPT-5.

And then there was a new round of speculation.

In between came Altman's bold prediction that GPT-10 will appear before 2030 and exceed the sum of all human intelligence, a true AGI.

Then came the recent news of OpenAI's multimodal model, Gobi, and the loud buzz around Google's Gemini model; the competition between the two giants is about to begin.

For a while, the latest progress in large language models has been the hottest topic in the AI community.

To borrow a line from an ancient poem, GPT-5 is "still holding the pipa, half-covering her face." We just don't know when it will finally "appear after a thousand calls."

Timeline Recap

What we are going to talk about today is directly related to GPT-5, and it is an analysis by our old friend Gary Marcus.

The core point fits in one sentence: going from GPT-4 to GPT-5 is not as simple as scaling up the model; it requires a change in the entire AI paradigm. From this point of view, OpenAI, which developed GPT-4, is not necessarily the company that will reach GPT-5 first.

In other words, when the paradigm needs to change, the previous accumulation is not very transferable.

But before we get into Marcus's point of view, let's briefly review what has happened around the legendary GPT-5 recently and what has been said in public discussion.

At first, Karpathy, the co-founder of OpenAI, tweeted that the H100 is a hot commodity sought after by the giants, and that everyone cares about who has them and how many.

GPT-4: possibly trained on about 10,000-25,000 A100s

Meta: about 21,000 A100s

Tesla: about 7,000 A100s

Stability AI: about 5,000 A100s

Falcon-40B: trained on 384 A100s

Musk also joined the discussion. According to him, training GPT-5 may take between 30,000 and 50,000 H100s.

Morgan Stanley had previously made a similar prediction, though its figure was somewhat lower than Musk's, at about 25,000 GPUs.

Of course, with GPT-5 being discussed so openly, Sam Altman had to come out and refute the rumors, stating that OpenAI is not training GPT-5.

Some bold netizens speculated that OpenAI could deny it simply because it had changed the name of its next-generation model, so that it is no longer called GPT-5.

The craving for GPUs is the same across the industry. According to statistics, the tech giants together need more than 430,000 GPUs. That is an astronomical sum, almost $15 billion.

But inferring GPT-5's status from GPU counts was a bit too roundabout, so DeepMind co-founder Suleyman directly "hammered" it in an interview, saying that OpenAI was secretly training GPT-5 and should stop hiding it.

Of course, in the full interview, Suleyman also shared plenty of big industry gossip, such as why DeepMind fell behind in the competition with OpenAI even though it clearly did not start much later.

There was also a lot of inside information, such as what happened when Google acquired DeepMind. But these have little to do with GPT-5, and interested readers can look them up for themselves.

All in all, this wave of comments from industry bigwigs about GPT-5's latest progress has left everyone suspicious.

After that, Sam Altman said in a one-on-one conversation, "I think before 2030, AGI will appear, called GPT-10, which is more than the sum of all human intelligence."

In this conversation, Altman envisioned many future scenarios: how he understands AGI, when AGI will appear, what OpenAI will do if AGI really appears, and what all of humanity should do.

But in terms of actual progress, Altman described his plan this way: "I told the people in the company that our goal was to improve the performance of our prototypes by 10 percent every 12 months."

"If you set that target at 20%, it might be a bit too high."

The most noteworthy item is the next one: OpenAI's Gobi multimodal model.

The focus is on what stage the white-hot competition between Google and OpenAI has reached.

Before talking about Gobi, we have to talk about GPT-vision. This generation of models is very powerful. Take a photo of a sketch, send it straight to GPT, and it will build the website for you in minutes.

Not to mention writing code.

Unlike GPT-4, Gobi was built from the ground up as a multimodal model.

This also aroused the interest of the onlookers - is Gobi the legendary GPT-5?

Suleyman insists that Sam Altman may not have been telling the truth when he recently said that OpenAI was not training GPT-5.

Marcus's View

Marcus begins by saying that quite possibly no pre-release product in the history of technology (with the possible exception of the iPhone) has been more anticipated than GPT-5.

It's not just that consumers are enthusiastic about it, or that a whole bunch of companies are planning to build from scratch around it; even some foreign policy is being built around GPT-5.

In addition, the advent of GPT-5 could also exacerbate the chip wars that have just escalated further.

Marcus said there are also people who specifically target models at GPT-5's expected scale and call for their development to be paused.

Of course, quite a few people are very optimistic, and some imagine that GPT-5 may eliminate, or at least greatly reduce, many of the concerns people have about existing models, such as their unreliability, their biases, and their tendency to spout authoritative-sounding nonsense.

But Marcus says it was never clear to him that simply building a larger model would actually solve these problems.

Today, some foreign media reported that another OpenAI project, Arrakis, which aimed to build smaller and more efficient models, was canceled by senior management because it did not meet its expected goals.

Marcus said that almost all of us expected GPT-5 to follow GPT-4 as quickly as possible, and that GPT-5 is often imagined to be much more powerful than GPT-4, so Sam surprised everyone when he denied it.

There has been a lot of speculation about this. One explanation is the GPU shortage mentioned above, or that OpenAI may not have enough cash on hand to train these models (which are notoriously expensive to train).

But then again, OpenAI is about as well funded as any startup has been. For a company that has just raised $10 billion, even $500 million for training is not out of reach.

A second explanation is that OpenAI realizes the costs of training or running such a model will be very high, and it is not sure it can turn a profit at those costs.

That seems to make some sense.

The third explanation, and Marcus's own view, is that OpenAI had already run some proof-of-concept tests around the time of Altman's presentation in May, but was not happy with the results.

In the end, OpenAI may have concluded that if GPT-5 is just an enlarged version of GPT-4, it will not meet expectations and will fall far short of its preset goals.

If the results would be merely disappointing, or even a joke, then training GPT-5 is not worth spending hundreds of millions of dollars.

In fact, LeCun is thinking the same way.

Going from GPT-4 to GPT-5 should be more than just a "GPT-4 plus"; the step from 4 to 5 should be epoch-making.

What is needed here is a new paradigm, not just scaling up the model.

So, when it comes to a paradigm change, the richer the company, the more likely it is to achieve this goal. But the difference is that it no longer has to be OpenAI. Because a paradigm change opens a new track, past experience or accumulation may not be of much use.

Similarly, from an economic point of view, if Marcus is right, then the development of GPT-5 is effectively postponed indefinitely. No one knows when the new technology will arrive.

It's like today's new energy vehicles, which generally have a range of hundreds of kilometers: if you want them to go thousands of kilometers, you need new battery technology. Beyond experience and capital, breaking through to a new technology may also take a little luck and chance.

But in any case, if Marcus is right, then the commercial value of GPT-5 will surely shrink a lot in the future.
